May 13, 2020

AI For Beginners

Autonomously Moving Agents

*Full Course*

Beginner Programming: Unity Game Dev Courses

Unity Learn Course – AI For Beginners

Intro

I completed the full Autonomously Moving Agents section of the Unity Learn AI course and cover all of it in this blog post. Each individual tutorial gets its own section.

1: Seek and Flee

They start a new project and scene containing a robber (NPC) and a cop (player controlled). The robber is given logic to seek and flee in relation to the player and the terrain obstacles.

Seek: Follow something else around

Flee: Move away from a specific object

Seek follows logic they have already used for following an object. Using the difference in positions between two objects, you can generate a vector which gives the direction between them and use that to guide an object towards the other one. Similar logic is actually used for Flee, except they just use the opposite of the vector generated between the objects to inform the agent of a direction directly away from a specific target. Since most movement done currently in these projects is target based, they just add the agent’s position to this vector to actually create a target to use in the desired direction.
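As a rough illustration of the vector math described above, here is a minimal 2D sketch in Python (the course itself works in Unity with C#). The function names `seek_direction` and `flee_destination` are hypothetical and not taken from the tutorial.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def seek_direction(agent: Vec2, target: Vec2) -> Vec2:
    """Unit vector pointing from the agent towards the target."""
    dx, dy = target[0] - agent[0], target[1] - agent[1]
    length = math.hypot(dx, dy)
    if length == 0.0:
        return (0.0, 0.0)  # already on top of the target
    return (dx / length, dy / length)


def flee_destination(agent: Vec2, target: Vec2) -> Vec2:
    """Point on the opposite side of the agent from the target."""
    dx, dy = target[0] - agent[0], target[1] - agent[1]
    # Negate the agent-to-target vector, then add the agent's position
    # so the result is a destination rather than a bare direction.
    return (agent[0] - dx, agent[1] - dy)
```

With the agent at the origin and the target at (3, 4), the seek direction points along (0.6, 0.8) and the flee destination lands at (-3, -4), directly away from the target.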

2: Pursuit

Pursuit: similar to seek, but uses information to determine where the target will be to decide on pathing, as opposed to just its immediate location

Mathematically, this uses information on the target’s position, as well as its velocity vector, to determine the target location. This information, combined with the NPC’s knowledge of its own speed, allows it to determine a path that should intercept its target some time in the future, assuming a constant velocity.
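One common way to approximate this prediction is a look-ahead time based on the current distance and the two speeds. The sketch below assumes that heuristic (it is an approximation, not an exact intercept solution), and the name `predict_position` is hypothetical.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def predict_position(agent_pos: Vec2, agent_speed: float,
                     target_pos: Vec2, target_vel: Vec2) -> Vec2:
    """Estimate where a constant-velocity target will be when reached."""
    distance = math.hypot(target_pos[0] - agent_pos[0],
                          target_pos[1] - agent_pos[1])
    target_speed = math.hypot(target_vel[0], target_vel[1])
    combined_speed = agent_speed + target_speed
    if combined_speed == 0.0:
        return target_pos  # nobody is moving, so there is nothing to predict
    # Assumed heuristic: look further ahead the further away the target is.
    look_ahead = distance / combined_speed
    return (target_pos[0] + target_vel[0] * look_ahead,
            target_pos[1] + target_vel[1] * look_ahead)
```

The pursuing agent would then seek the returned point instead of the target's current position.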

Conditions they are adding for varied movement:

If player is not moving, change NPC behavior from Pursue to Seek

If NPC is ahead of player, turn around and change Pursue to Seek

They again use angles between the forward vectors and the vector between the two interacting objects to determine the relative positioning of the NPC and player (whether the NPC is ahead of the player). They use a Transform method, TransformVector, to ensure the target’s forward vector is accurately translated to world space, so it can be compared with the NPC’s own forward vector in world space to get a proper angle measurement.
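The two conditions above can be sketched with plain angle checks. This is a 2D Python approximation with all vectors already in world space. The thresholds (90 and 20 degrees, and the minimum speed) are assumed values for illustration, and `should_seek_instead` is a hypothetical name.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def angle_between(a: Vec2, b: Vec2) -> float:
    """Unsigned angle between two vectors, in degrees."""
    mag = math.hypot(a[0], a[1]) * math.hypot(b[0], b[1])
    if mag == 0.0:
        return 0.0
    cos_angle = (a[0] * b[0] + a[1] * b[1]) / mag
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding
    return math.degrees(math.acos(cos_angle))


def should_seek_instead(npc_pos: Vec2, npc_forward: Vec2,
                        target_pos: Vec2, target_forward: Vec2,
                        target_speed: float,
                        min_speed: float = 0.01,
                        behind_angle: float = 90.0,
                        heading_angle: float = 20.0) -> bool:
    """True when plain Seek is a better choice than Pursue."""
    if target_speed < min_speed:
        return True  # target is effectively standing still
    to_target = (target_pos[0] - npc_pos[0], target_pos[1] - npc_pos[1])
    # Target is behind the NPC and both face roughly the same way,
    # which means the NPC is ahead of the target.
    target_is_behind = angle_between(npc_forward, to_target) > behind_angle
    same_heading = angle_between(npc_forward, target_forward) < heading_angle
    return target_is_behind and same_heading
```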

3: Evade

Evade: similar to pursuit but uses predicted position to move away from where target is predicted to end up

Since it is so similar to pursuit, implementing it in the tutorial could basically be done by copying the pursuit logic and just changing the Seek method to the Flee method (which effectively just sets the destination to the opposite of the vector between the agent and its target position). They do it even more simply by just getting the target’s predicted position and straight away using the Flee method on that position.
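A minimal Python sketch of that idea: predict the target's position, then flee from the prediction. The look-ahead heuristic and the name `evade_destination` are assumptions for illustration, not the tutorial's exact code.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def evade_destination(agent_pos: Vec2, agent_speed: float,
                      target_pos: Vec2, target_vel: Vec2) -> Vec2:
    """Destination directly away from the target's predicted position."""
    distance = math.hypot(target_pos[0] - agent_pos[0],
                          target_pos[1] - agent_pos[1])
    combined_speed = agent_speed + math.hypot(target_vel[0], target_vel[1])
    # Assumed look-ahead heuristic: distance divided by combined speed.
    look_ahead = distance / combined_speed if combined_speed > 0.0 else 0.0
    predicted = (target_pos[0] + target_vel[0] * look_ahead,
                 target_pos[1] + target_vel[1] * look_ahead)
    # Flee: mirror the agent-to-prediction vector around the agent.
    return (agent_pos[0] - (predicted[0] - agent_pos[0]),
            agent_pos[1] - (predicted[1] - agent_pos[1]))
```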

4: Wander

Wander: the somewhat random movement of the agent when it does not have a particular task; comparable to an idle standing state

Their approach for wander is to create a circle in front of the agent. A target location sits on the edge of this circle and moves a bit along it each update to provide some variety in the agent’s pathing.

Important Parameters:

Wander Distance: distance from agent to center of circle

Wander Radius: size of circle

Wander Jitter: influences how target location moves about circle
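To show how these three parameters interact, here is a small 2D Python sketch. The class `Wanderer`, the helper `local_to_world`, and the convention that local +y is the agent's forward direction are all assumptions made for this example.

```python
import math
import random
from typing import Optional, Tuple

Vec2 = Tuple[float, float]


class Wanderer:
    """Keeps a jittering point on a circle placed ahead of the agent."""

    def __init__(self, distance: float, radius: float, jitter: float,
                 rng: Optional[random.Random] = None) -> None:
        self.distance = distance  # agent to circle center
        self.radius = radius      # size of the circle
        self.jitter = jitter      # how far the point moves per update
        self.rng = rng if rng is not None else random.Random()
        self.offset: Vec2 = (0.0, radius)  # point relative to circle center

    def next_local_target(self) -> Vec2:
        """Nudge the point, snap it back onto the circle, return it in
        the agent's local space (forward is +y)."""
        x = self.offset[0] + self.rng.uniform(-1.0, 1.0) * self.jitter
        y = self.offset[1] + self.rng.uniform(-1.0, 1.0) * self.jitter
        length = math.hypot(x, y)
        if length == 0.0:
            x, y, length = 0.0, 1.0, 1.0  # degenerate case: reset to forward
        self.offset = (x / length * self.radius, y / length * self.radius)
        return (self.offset[0], self.offset[1] + self.distance)


def local_to_world(agent_pos: Vec2, heading: float, local: Vec2) -> Vec2:
    """Convert a local point to world space; heading 0 faces world +y."""
    forward = (math.sin(heading), math.cos(heading))
    right = (math.cos(heading), -math.sin(heading))
    return (agent_pos[0] + right[0] * local[0] + forward[0] * local[1],
            agent_pos[1] + right[1] * local[0] + forward[1] * local[1])
```

A larger jitter makes the point jump further around the circle each update, while a larger radius widens how sharply the agent can be pulled to either side.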

5: Hide

Part 1

Hiding requires having objects to hide behind. Their approach is to create a vector from the chasing object to an object to hide behind, and extend this vector a bit to create a target destination for the agent to hide at. A vector is then created between the hiding agent and this hiding destination to guide pathing.

The “hide” objects are tagged “hide” in Unity, and are static navigational meshes. They created a singleton class named World to hold all the hide locations. It gathers them by using the FindGameObjectsWithTag method to find all the objects with the “hide” tag.

They mention two ways to find the best hiding spot. The agent can look for either the furthest spot or the nearest spot. They decided to use the nearest spot approach.

Hide Method

The Hide method goes through the array of all the “hide” tagged objects gathered in the World class and determines a hiding position for each of them relative to the target the agent is hiding from. It then chooses the nearest hiding position and moves there. Each hiding position is determined by creating a vector from the target to the hide object and, starting from the hide object, extending a bit further in the same direction. The result is a position some distance behind the hide object, with the hide object between the target and the agent.
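The selection logic can be sketched in a few lines of 2D Python. The function names and the default `offset` of 2 units are assumptions for illustration only.

```python
import math
from typing import Sequence, Tuple

Vec2 = Tuple[float, float]


def hide_position(chaser: Vec2, obstacle: Vec2, offset: float) -> Vec2:
    """Point `offset` units behind the obstacle, as seen from the chaser."""
    dx, dy = obstacle[0] - chaser[0], obstacle[1] - chaser[1]
    length = math.hypot(dx, dy)
    if length == 0.0:
        return obstacle  # chaser is on the obstacle; no direction to extend
    return (obstacle[0] + dx / length * offset,
            obstacle[1] + dy / length * offset)


def nearest_hide_position(agent: Vec2, chaser: Vec2,
                          obstacles: Sequence[Vec2],
                          offset: float = 2.0) -> Vec2:
    """Hide position closest to the agent; `obstacles` must not be empty."""
    candidates = [hide_position(chaser, o, offset) for o in obstacles]
    return min(candidates,
               key=lambda p: math.hypot(p[0] - agent[0], p[1] - agent[1]))
```

Swapping `min` for `max` would give the "furthest spot" variant mentioned earlier.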

Part 2

The hide vector calculations use the transform position of the hiding objects, which is generally at the center of the object. This means the distance the agent is placed from the center should vary, because objects can be different sizes.

To consistently get a position close to an object regardless of its size, they use an approach where the objects have a collider and the vector passes fully through this collider and beyond. It then produces a vector from that position coming directly back in the opposite direction until it hits the collider again. This position where it hits the collider is then what is used as the position for the agent to hide in. This is done because it is much easier to determine where a ray or vector first collides with a collider than where it leaves a collider.
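Here is a 2D Python sketch of the "overshoot, then cast back" idea, using a circle as a stand-in for the collider. The circle shape, the function names, and the default `overshoot` of 100 units are assumptions for this example. In Unity the collider's own raycast would do the intersection work.

```python
import math
from typing import Optional, Tuple

Vec2 = Tuple[float, float]


def ray_circle_first_hit(origin: Vec2, direction: Vec2,
                         center: Vec2, radius: float) -> Optional[Vec2]:
    """First point where a ray enters a circle (direction must be unit)."""
    mx, my = origin[0] - center[0], origin[1] - center[1]
    b = mx * direction[0] + my * direction[1]
    c = mx * mx + my * my - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None  # the ray misses the circle
    t = -b - math.sqrt(disc)
    if t < 0.0:
        return None  # origin is inside the circle, or the circle is behind
    return (origin[0] + direction[0] * t, origin[1] + direction[1] * t)


def clever_hide_position(chaser: Vec2, center: Vec2, radius: float,
                         overshoot: float = 100.0) -> Optional[Vec2]:
    """Point on the far edge of the collider, found by casting back."""
    dx, dy = center[0] - chaser[0], center[1] - chaser[1]
    length = math.hypot(dx, dy)
    if length == 0.0:
        return None
    ux, uy = dx / length, dy / length
    # Go well past the object (overshoot should exceed its size)...
    far_point = (center[0] + ux * overshoot, center[1] + uy * overshoot)
    # ...then cast straight back and keep the first surface hit.
    return ray_circle_first_hit(far_point, (-ux, -uy), center, radius)
```

Because the hit point sits on the collider surface, the result adapts to the object's size without a hand-tuned offset per object.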

The new method they created with this additional logic is named CleverHide(). Combining this logic with the NavMeshAgent tools in Unity can require some fine tuning. The main factor to keep track of on the NavMesh side is the stopping distance, as this is how close the agent has to get to the actual destination before the system considers it close enough and stops the agent.

They added a house object to test the system with more objects of various sizes, and it worked OK. It was interesting because the agent wouldn’t move even when the target player moved significantly, so sometimes you could get very close before it would move to another hiding position. I am not sure whether this was another NavMesh stopping distance interaction or something in the new hiding method.

Finally, for efficiency they did not want to run any Hide method every frame, so they added a bool check to see whether the agent was already hidden before actually performing the Hide logic. To check if the agent should hide, they performed a raycast to see if it could directly see the player target. If it could, it would hide; if not, it would stay where it was.
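The line-of-sight check can be approximated in 2D Python by testing whether the segment between agent and target passes through any obstacle. Circles stand in for colliders here, and both function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec2 = Tuple[float, float]
Circle = Tuple[Vec2, float]  # (center, radius)


def segment_hits_circle(a: Vec2, b: Vec2,
                        center: Vec2, radius: float) -> bool:
    """True if the segment from a to b touches or crosses the circle."""
    abx, aby = b[0] - a[0], b[1] - a[1]
    length_sq = abx * abx + aby * aby
    if length_sq == 0.0:
        t = 0.0
    else:
        # Project the center onto the segment and clamp to its ends.
        t = ((center[0] - a[0]) * abx + (center[1] - a[1]) * aby) / length_sq
        t = max(0.0, min(1.0, t))
    closest = (a[0] + abx * t, a[1] + aby * t)
    return math.hypot(closest[0] - center[0],
                      closest[1] - center[1]) <= radius


def can_see_target(agent: Vec2, target: Vec2,
                   obstacles: Sequence[Circle]) -> bool:
    """True when no obstacle blocks the straight line to the target."""
    return not any(segment_hits_circle(agent, target, c, r)
                   for c, r in obstacles)
```

The agent would only run its hide logic while `can_see_target` returns True, and stay put otherwise.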

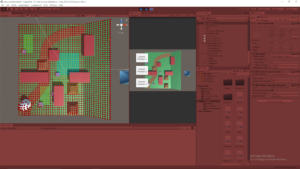

6: Complex Behaviors

Complex Behaviors: combining the basic behaviors covered so far to give the agent decisions about how it should act. The first combination they do is choosing between pursue (when the player target is looking away) and hide (when the player target is looking at the agent). The looking check is an angle check using the target’s forward vector.

They also added a cooldown timer to limit how often the agent transitions between states, since the current setup led to some very weird behavior when hiding. The agent would break line of sight with the target immediately upon hiding, which would cause it to pursue immediately, and then hide again, and so on, so it would just move back and forth at the edge of a hiding spot.
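A cooldown like this can be sketched as a small selector that refuses to switch state until a timer has elapsed. The class name `BehaviourSelector`, the state strings, and the 5 second default are assumptions for illustration. Time is passed in explicitly so the logic stays easy to test.

```python
class BehaviourSelector:
    """Picks between pursue and hide, locking the choice for a while."""

    def __init__(self, cooldown_seconds: float = 5.0) -> None:
        self.cooldown_seconds = cooldown_seconds
        self.locked_until = 0.0
        self.current = "pursue"

    def update(self, now: float, target_is_looking: bool) -> str:
        """Return the behaviour to run at time `now` (in seconds)."""
        if now < self.locked_until:
            return self.current  # still cooling down, keep the last choice
        desired = "hide" if target_is_looking else "pursue"
        if desired != self.current:
            self.current = desired
            # Start the cooldown only when the state actually changes.
            self.locked_until = now + self.cooldown_seconds
        return self.current
```

With this in place, breaking line of sight right after hiding no longer flips the agent straight back to pursuing.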

7: Behavior Challenge

Challenge:

Keep pursuit and hiding as they are

Include range

If player is outside distance of 10, agent should wander

My approach: I added a bool method named FarFromTarget() that did a distance check between the agent and the target. If the magnitude of that vector was greater than 10, it returned true, otherwise it returned false.

Then in Update I added another branch after the cooldown bool check to see if FarFromTarget was true. If so, the agent entered Wander; otherwise it performed the same logic as before, with the checks to perform either CleverHide or Pursue.

Their approach: They also created a bool method, but they named it TargetInRange(). From there, they followed the same process I did. They checked TargetInRange inside the cooldown if statement and handled Wander first, then an else if branch handled the previous logic (CleverHide or Pursue).
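Both approaches boil down to the same decision order, which can be sketched in Python as below. The function names mirror my FarFromTarget() method, but the string return values and the `target_is_looking` flag are simplifications for this example.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def far_from_target(agent: Vec2, target: Vec2,
                    max_range: float = 10.0) -> bool:
    """True when the target is further away than `max_range`."""
    distance = math.hypot(target[0] - agent[0], target[1] - agent[1])
    return distance > max_range


def choose_behaviour(agent: Vec2, target: Vec2,
                     target_is_looking: bool,
                     max_range: float = 10.0) -> str:
    """Wander takes priority; otherwise fall back to hide or pursue."""
    if far_from_target(agent, target, max_range):
        return "wander"
    if target_is_looking:
        return "hide"
    return "pursue"
```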

Summary

All these behaviors are relatively simple on their own, but as shown in the final tutorials, combining them with transitions can create interesting and seemingly complex behaviors. This type of design would work very well with the Finite State Machine systems covered in earlier tutorials (as well as in others I’ve researched). State machines are also a nice way to ensure the isolation and encapsulation of the individual types of behavior.