Category: AI

AGrid System Breakdown for A* Pathfinding

May 5, 2020

A* Pathfinding

AGrid System Breakdown

AGrid class:

– this class sets up the initial node grid

1) Determining grid size and resolution

– Input: nodeRadius – parameter to create a nodeDiameter (which is the “real world” size of the nodes)

– Input: GridWorldSize – determines an x and y distance for the grid to cover (in real world units)

– broken into GridWorldSizeX and GridWorldSizeY

– Output: number of nodes (node resolution) – System uses these GridWorldSize values and the nodeDiameter to determine how many nodes it needs to create to fill this area

– e.g. Inputs: GridWorldSize x = 10; GridWorldSize y = 10; nodeRadius = 1

– Output: creates a grid 5 nodes wide by 5 nodes deep, with each node 2 units across (10 / 2 = 5 nodes per axis)

– Note: "diameter" is a bit misleading since the nodes aren't circular; their dimensions are used in a rectangular fashion, so the diameter is really an edge length
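A minimal sketch of this resolution step, written in plain C# so it runs outside Unity. It assumes the node count per axis is the world size divided by nodeDiameter, rounded to the nearest whole node (in the Unity version, `Mathf.RoundToInt` would play the role of `Math.Round`). The `GridResolution` class is a hypothetical name, not part of AGrid.

```csharp
using System;

// Hypothetical helper: converts world-space grid dimensions into a node count per axis.
public static class GridResolution
{
    // Returns (X, Y): how many nodes fit along each axis of the grid.
    public static (int X, int Y) Compute(float gridWorldSizeX, float gridWorldSizeY, float nodeRadius)
    {
        if (nodeRadius <= 0f)
            throw new ArgumentOutOfRangeException(nameof(nodeRadius), "Node radius must be positive.");

        // Nodes are treated as squares whose edge length is the "diameter".
        float nodeDiameter = nodeRadius * 2f;

        // Round to the nearest whole node so small float errors do not drop a row or column.
        int gridSizeX = (int)Math.Round(gridWorldSizeX / nodeDiameter);
        int gridSizeY = (int)Math.Round(gridWorldSizeY / nodeDiameter);
        return (gridSizeX, gridSizeY);
    }
}
```

With the inputs from the example above, `GridResolution.Compute(10f, 10f, 1f)` gives a 5 by 5 grid.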

2) Positioning Nodes in Real World Space

– The transform of the empty object this AGrid class is attached to is used as the central real world location for the grid

– This value is then used to find the bottom-left corner location that everything builds from

– Vector3 worldBottomLeft = transform.position - Vector3.right * gridWorldSize.x / 2 - Vector3.forward * gridWorldSize.y / 2;

– starting at the central point, this moves left by half the total width along the x axis, then back by half the total depth along the z axis (gridWorldSize.y is measured along world z)

– The first node is placed at this bottom-left corner, and further nodes are placed nodeDiameter apart from it until the grid is filled

– It fills in an entire column, then moves to the next one across the grid

– Finally, each node starts at an arbitrary y-value (elevation) above the terrain and casts a ray downward until it hits the terrain

– Each node then places itself at the height where its ray intersects the terrain
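The corner calculation and column-by-column fill can be sketched in plain C# as below. `System.Numerics.Vector3` stands in for Unity's `Vector3` (`UnitX`/`UnitZ` playing the roles of `Vector3.right`/`Vector3.forward`), and the hypothetical `terrainHeightAt` delegate replaces the downward raycast by returning the terrain height at a given x/z. As described above, the first node sits exactly on the bottom-left corner.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

// Hypothetical sketch of AGrid-style node placement (plain C#, no Unity).
public static class GridPlacement
{
    // Corner the grid builds from: move left half the width (x) and back half the depth (z).
    public static Vector3 BottomLeft(Vector3 center, float gridWorldSizeX, float gridWorldSizeY)
    {
        return center - Vector3.UnitX * (gridWorldSizeX / 2f) - Vector3.UnitZ * (gridWorldSizeY / 2f);
    }

    // Places nodes column by column: x stays fixed while y (world z) advances.
    // terrainHeightAt stands in for the downward raycast and returns the ground height at (x, z).
    public static List<Vector3> PlaceNodes(Vector3 center, float gridWorldSizeX, float gridWorldSizeY,
                                           float nodeDiameter, int gridSizeX, int gridSizeY,
                                           Func<float, float, float> terrainHeightAt)
    {
        var positions = new List<Vector3>(gridSizeX * gridSizeY);
        Vector3 worldBottomLeft = BottomLeft(center, gridWorldSizeX, gridWorldSizeY);

        for (int x = 0; x < gridSizeX; x++)          // one column per x index
        {
            for (int y = 0; y < gridSizeY; y++)      // fill the whole column before moving on
            {
                // The first node sits on the corner; the rest step nodeDiameter apart.
                Vector3 flat = worldBottomLeft
                             + Vector3.UnitX * (x * nodeDiameter)
                             + Vector3.UnitZ * (y * nodeDiameter);

                // Snap the node's elevation to where its "ray" meets the terrain.
                float groundY = terrainHeightAt(flat.X, flat.Z);
                positions.Add(new Vector3(flat.X, groundY, flat.Z));
            }
        }
        return positions;
    }
}
```

For a 10 by 10 grid centered on the origin with nodeDiameter 2, the first node lands at (-5, ground, -5), the second at (-5, ground, -3), and the sixth starts the next column at (-3, ground, -5).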

3) Adding Values to the Nodes

a) Raycast Values

– During raycasting, the node can detect either obstacles or terrain types

– Obstacles: tells the node it is unwalkable, so it will be ignored for A* pathfinding logic

– Terrain Type: can add an inherent extra movement cost to that particular node based on its terrain type
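A sketch of how a raycast result might be turned into node values. The `HitKind` enum stands in for checking which layer the ray hit, and the terrain names and penalty numbers are made-up examples; none of these names come from the project.

```csharp
using System.Collections.Generic;

// Hypothetical summary of what a node's downward ray hit.
public enum HitKind { Terrain, Obstacle }

public sealed class GridNode
{
    public bool Walkable;
    public int MovementPenalty;
}

public static class NodeRaycastValues
{
    // Example per-terrain-type extra costs; real values would be tuned in the editor.
    static readonly Dictionary<string, int> TerrainPenalties = new Dictionary<string, int>
    {
        { "Grass", 0 },
        { "Mud", 10 },
        { "Water", 25 },
    };

    public static GridNode FromHit(HitKind kind, string terrainType)
    {
        var node = new GridNode();
        if (kind == HitKind.Obstacle)
        {
            // Obstacles make the node unwalkable so A* never expands it.
            node.Walkable = false;
            return node;
        }

        node.Walkable = true;
        // Unknown (or missing) terrain types fall back to no extra cost.
        node.MovementPenalty = terrainType != null && TerrainPenalties.TryGetValue(terrainType, out int penalty)
            ? penalty
            : 0;
        return node;
    }
}
```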

b) Applying Influence

– The AGrid class receives information on any Influence class objects placed in the area

– Using this information, it then calls the ApplyInfluence method of all the Influence objects found to add their values to the proper nodes

4) Blending Values

– *This step is extra and may not be needed

– There is a step to blend the movement penalty costs over the terrain, which keeps hard borders between values from forming

– e.g. If nodes with penalty value "A" are near nodes with value "B", the nodes in between will take values ranging between A and B

– This currently only applies to movement penalties, but could be extended to other values if it seems useful
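The post does not say how the blending is done; one common way to soften hard borders is a box blur, where each node's penalty becomes the average of its neighborhood. A plain C# sketch, assuming penalties are stored as an `int[,]` indexed `[x, y]` and that edge nodes reuse the nearest in-grid value:

```csharp
using System;

public static class PenaltyBlur
{
    // Box blur: each node's penalty becomes the average of a (2 * radius + 1)^2 neighborhood.
    // Out-of-grid samples are clamped to the nearest edge node, so every average uses the same count.
    public static int[,] Blur(int[,] penalties, int radius)
    {
        int w = penalties.GetLength(0), h = penalties.GetLength(1);
        int size = radius * 2 + 1;
        var result = new int[w, h];

        for (int x = 0; x < w; x++)
        for (int y = 0; y < h; y++)
        {
            int sum = 0;
            for (int dx = -radius; dx <= radius; dx++)
            for (int dy = -radius; dy <= radius; dy++)
            {
                int sx = Math.Clamp(x + dx, 0, w - 1);
                int sy = Math.Clamp(y + dy, 0, h - 1);
                sum += penalties[sx, sy];
            }
            result[x, y] = (int)Math.Round(sum / (double)(size * size));
        }
        return result;
    }
}
```

With a radius of 1, a hard border such as 0, 0, 30, 30 becomes 0, 10, 20, 30, which is the kind of in-between range described above. A larger radius spreads the transition over more nodes.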

Architecture AI Cost Calculation Class for AStar

April 27, 2020

Architecture AI Project

Building Architecture Value to A* Cost Class

Math for Calculating A* Cost with Architecture Value and Agent Affinity Integration

I needed to translate agents' affinities for architectural elements, and how those affinities interact with the architectural values stored in the nodes throughout the A* grid, into cost values that fit A*'s pathfinding logic. To do this, I wanted a class that is always available and contains all the math and constraints for this cost translation.

Class MathArchCost

The class MathArchCost was created to fulfill this purpose. It is a public class with a public static instance, so other classes can readily access it to perform calculations or check minimum and maximum values. I chose this route instead of making the whole class static because I wanted an instance of this object that I could edit in the inspector while building, to tweak and test values. I may investigate ways of editing a truly static class in the inspector in the future.

Relationship Between Agent Affinity and Architectural Value

I wanted a system where agents with a very high affinity for a particular architectural element would be drawn to nodes with that type of architectural value, and drawn more strongly the higher that value is. Similarly, I wanted a very low affinity for an architectural element type to drive agents away from nodes with that type of architectural value, and even more so from nodes with high values of that type. Examples of these types include window, light, and narrowness.

To solve this, I decided to use a basic formula that takes agent affinity as input and outputs a "cost per architectural value" rate. This way, high affinities produce very low or even negative rates (because lowering cost is what persuades an agent to move onto a node), and low affinities produce high cost rates. Using a rate captures the idea that the more of an architectural element a node contains, the greater its impact on the overall cost.

Default Architectural Cost

Architectural cost is the resulting cost on a node for a particular agent, accounting for the agent's affinity and the node's architectural values. It is then incorporated into the overall A* cost calculations to determine how "good" the agent perceives traveling across this node to be. Since subtracting values can sometimes produce strange or unwanted results, I did not want certain affinity and architectural value combinations to remove cost from the node's overall cost. To avoid this, I added a "default architectural cost" to each node, which the affinity/architectural value relationship can increase or reduce (without lowering it below 0).

Summarizing Architectural Cost from Architectural Value and Agent Affinity Relationship

So the overall concept is that every node has some cost used in A* pathfinding. An additional cost, Architectural Cost, was created to account for agent affinities and the architectural values of the nodes. Every node gets an architectural cost that starts at the default cost and is either reduced (to a minimum of 0) by very high affinity combined with very high architectural value, or raised, up to an arbitrary ceiling determined by the maximum possible affinity in the system and the maximum architectural value a node can have. Ensuring the cost never dips below 0 is more important than restricting the upper limit.
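Putting these pieces together, a plain C# sketch of MathArchCost might look like the following. It assumes the real class is a MonoBehaviour whose `Awake()` assigns the static instance (here the hypothetical `MakeInstance()` stands in for that), that affinity ranges from MinAffinity to MaxAffinity, and that the rate is linear in affinity. The field values and method names are illustrative, not the project's.

```csharp
using System;

// Plain-C# sketch of MathArchCost. In Unity this would be a MonoBehaviour whose Awake()
// sets Instance = this, so the inspector-edited component is the one every class reads from.
public class MathArchCost
{
    public static MathArchCost Instance { get; private set; }

    // Tunable limits (serialized inspector fields in Unity); the numbers are assumptions.
    public float MinAffinity = 0f;
    public float MaxAffinity = 10f;
    public float MaxArchValue = 10f;
    public float DefaultArchCost = 10f;

    // Stand-in for Awake(): makes this object the shared instance.
    public void MakeInstance() => Instance = this;

    // Cost per unit of architectural value. Average affinity gives 0 (neutral),
    // above-average affinity a negative rate (attractive), below-average a positive one.
    public float CostRate(float affinity)
    {
        float average = (MinAffinity + MaxAffinity) / 2f;
        float halfRange = (MaxAffinity - MinAffinity) / 2f;
        // Scaled so MAX affinity on MAX architectural value cancels exactly the default cost.
        return (DefaultArchCost / MaxArchValue) * (average - affinity) / halfRange;
    }

    // Default cost adjusted by affinity x architectural value, never allowed below 0.
    public float ArchitecturalCost(float affinity, float archValue)
    {
        affinity = Math.Clamp(affinity, MinAffinity, MaxAffinity);
        archValue = Math.Clamp(archValue, 0f, MaxArchValue);
        float cost = DefaultArchCost + CostRate(affinity) * archValue;
        return Math.Max(0f, cost);
    }
}
```

Because inputs are clamped, an out-of-range pair such as affinity 15 and architectural value 20 behaves like MAX/MAX and returns 0.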

Next Steps

I have already looked into putting these values together in a precise mathematical relationship, which I will explore in my next blog post. I am looking to finally integrate this into the A* pathfinding system and test it on agents with a single architectural affinity type (at various values), adding varied architectural values of that type to nodes throughout the A* grid, to see whether the results move in the direction I expect and how processing intensive the calculation is. We currently only need to test a single agent at a time, so the process can be rather expensive if needed, but it still needs to be tested, and adding to the cost determination process concerns me.

Updating A* to Incorporate Various Architectural Elements Influencing Pathfinding

March 21, 2020

Updating A*

Different Agent Types and Different Architectural Elements

Adding Architectural Elements of Influence to A* Pathfinding

We want to be able to add various architectural elements to an environment and use them to influence the pathing of AI agents using A* pathfinding. Along with this, we want various agents to respond differently to the same architectural stimulus (e.g. some agents should prefer moving near windows, while others should prefer to stay away from windows). This required adding some architectural data to both the agents and the nodes within the A* grid.

Implementing Architectural Elements

I have started implementing the approach outlined in my blog post from yesterday to create the foundation for this architectural pathing addition. To work out the feature, I started with a single data point by adding a "window" value to both the agents and the nodes of the grid. The agent window value measures the agent's affinity for window spaces (a higher value means they are more likely to move near or towards windows), and the node window value represents how much that space is influenced by windows (being closer to a window, or near several windows, raises this value; a higher value means the node is more window influenced, and more appealing to agents with high window affinity).

I modified the existing Influence class into an abstract base class that I will use as the basis for these architectural influence objects. This lets me pass on traits shared by all types of influence objects (such as the dimensions of their area of influence) as well as methods they will all need (such as one that determines how they apply influence to the area around them). Related to this, I was able to move the influence method out of the AGrid class, which also prepares the entire grid, so this helped trim that class down.

Moving the influence spreading methods to the Influence objects themselves was also crucial because it lets different objects apply influence in different, more unique ways. Some objects may apply to simple rectangular areas, while others may project out in a cone, or even use raycasts to determine their area of influence. To help these Influence objects influence the proper area, I pass them a reference to the grid as well as their starting position (in terms of nodes on the grid). The AGrid class is a good place to start this, since it currently holds a reference to all the Influence objects in the scene: it uses each object's transform to determine which node it is centered on, and passes that node on to the Influence object so it knows where it is located in the grid.
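A minimal sketch of the abstract Influence base class with one rectangular subclass. `InfluenceGrid`, `InfluenceNode`, and the `Window` field are simplified stand-ins for AGrid and its nodes, and `ApplyInfluence(grid, startX, startY)` assumes the start position is passed as node indices, as described above; the exact signature in the project may differ.

```csharp
using System;

// Simplified stand-ins for the grid data (names are assumptions, not the project's classes).
public sealed class InfluenceNode { public float Window; }

public sealed class InfluenceGrid
{
    public readonly InfluenceNode[,] Nodes;

    public InfluenceGrid(int sizeX, int sizeY)
    {
        Nodes = new InfluenceNode[sizeX, sizeY];
        for (int x = 0; x < sizeX; x++)
            for (int y = 0; y < sizeY; y++)
                Nodes[x, y] = new InfluenceNode();
    }

    public int SizeX => Nodes.GetLength(0);
    public int SizeY => Nodes.GetLength(1);
}

// Base class: shared data (area dimensions) plus the method every influence type must implement.
public abstract class Influence
{
    public int HalfWidth;   // area of influence, in nodes, on each side of the start node (x)
    public int HalfDepth;   // same along y (world z)
    public float Value;     // amount added to each affected node

    // The grid passes itself and the node this object is centered on.
    public abstract void ApplyInfluence(InfluenceGrid grid, int startX, int startY);
}

// Simplest variant: a rectangle of nodes around the start node, clipped to the grid bounds.
public sealed class RectangleInfluence : Influence
{
    public override void ApplyInfluence(InfluenceGrid grid, int startX, int startY)
    {
        for (int x = Math.Max(0, startX - HalfWidth); x <= Math.Min(grid.SizeX - 1, startX + HalfWidth); x++)
            for (int y = Math.Max(0, startY - HalfDepth); y <= Math.Min(grid.SizeY - 1, startY + HalfDepth); y++)
                grid.Nodes[x, y].Window += Value;
    }
}
```

A cone- or raycast-based influence would be another subclass overriding ApplyInfluence, while AGrid still only calls the base method.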

Finally, I had to make sure a reference to the individual agent was passed through the entire pathfinding process, so that information could be used to produce a possibly unique path for each type of agent. This meant adding a UnitSimple parameter that passes the agent from UnitSimple itself to PathRequestManagerSimple, and eventually to PathfindingHeapSimple, which uses that data to modify how it calculates costs when creating the paths.

Investigating How to Apply Architectural Influence as Cost

Figuring out how to combine the architectural values within the nodes and the architectural affinities of the agent in a mathematically sensible way has proven difficult, and I am still investigating a clean way to implement this. Because of how costs work in this pathfinding system, subtracting values from costs in an uncontrolled way can be risky and prone to breaking, since negative values can produce some very strange results.

To help determine a system to implement this, I broke it down as simply as I could. The goal is to use the two values, agent affinity and node architectural value, together to produce an architectural cost to add on top of the normal distance cost of a node. From there, I created a set of constraints to guide the process, and eventually a basic system to follow for now that gives results at least in the proper realm of what we are looking for.

Architectural Cost Constraint Breakdown

Early on, I thought it would be helpful to have a MAX possible affinity value and a MAX possible architectural value, since I expected to either subtract from these values (so bigger numbers lead to lower additional cost) or divide by them (to provide some proportionality within the cost calculation). These arbitrary values then shaped some of the initial constraints I came up with. These constraints were (each result is the architectural cost, which is added to the total node cost):

- MAX Affinity with MAX Architectural Value = 0

- MIN Affinity with MAX Architectural Value = MAX Architectural Cost

- Average Affinity with ANY Architectural Value = DEFAULT

- ANY Affinity with 0 Architectural Value = DEFAULT

The ideas behind these constraints were: the highest affinity on a node with the most architectural value possible should produce the lowest possible architectural cost (0 in this case); the lowest affinity (greatest aversion) with the highest architectural value on a node should produce the highest possible architectural cost; an average affinity means the agent is neutral towards that architectural element, so no value has any major influence on the cost; and finally, if a node has 0 architectural value for a feature, the agent takes on no cost beyond the default regardless of their affinity, since the feature is not present in any way at that node.
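One candidate that satisfies all four constraints is a rate that is linear in affinity (an assumption; the post does not commit to a formula here). Using $a$ for agent affinity in $[a_{\min}, a_{\max}]$, $v$ for the node's architectural value in $[0, v_{\max}]$, and $C_0$ for the DEFAULT cost:

```latex
\begin{aligned}
\bar{a} &= \frac{a_{\min} + a_{\max}}{2}, \qquad
r(a) = \frac{C_0}{v_{\max}} \cdot \frac{\bar{a} - a}{a_{\max} - \bar{a}}, \qquad
C_{\mathrm{arch}}(a, v) = \max\bigl(0,\; C_0 + r(a)\,v\bigr) \\[4pt]
C_{\mathrm{arch}}(a_{\max}, v_{\max}) &= C_0 + \left(-\frac{C_0}{v_{\max}}\right) v_{\max} = 0 \\
C_{\mathrm{arch}}(a_{\min}, v_{\max}) &= C_0 + \frac{C_0}{v_{\max}}\, v_{\max} = 2C_0 \\
C_{\mathrm{arch}}(\bar{a}, v) &= C_0 + 0 \cdot v = C_0 \\
C_{\mathrm{arch}}(a, 0) &= C_0
\end{aligned}
```

In this linear form the MAX Architectural Cost is pinned to $2C_0$, since $\bar{a} - a_{\min} = a_{\max} - \bar{a}$ makes $r(a_{\min}) = C_0 / v_{\max}$.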

Further Breakdown of Interaction Between Agent Affinity and Architectural Node Value

While this was a step in the right direction, I still was not sure exactly how to determine the architectural cost numerically. To help myself further, I settled on a system that made a decent amount of sense for how I wanted these factors to interact. The tricky part is that the resulting cost on the node comes from the interaction between these two factors; more of one or more of the other does not reduce cost on its own.

The concept I settled on was that the agent's affinity value would be used to calculate a cost per architectural value factor (using basic factor labeling, multiplying this by the architectural value results in a cost value). Having a MAX possible affinity value made sense and helped with this approach. Affinities below the average (the value midway between the MIN and MAX affinities) would increase the cost of the node, while values above the average would reduce it. The architectural value then just determines how much this rate influences the cost of the node. This correctly captures the points that agents with very low affinities should really want to avoid nodes with a lot of that architectural element, and those with very high affinities should really prefer such nodes.

At this point I feel I have most of the constraining factors and rules in place; I just need to bring them together in a relatively clean calculation. My first attempt does not need to be the perfect solution, since I can modify it later if certain mathematical approaches work better for this situation. It just needs to capture the general ideas and goals we need from the system for now.

Next Steps

I mostly just need to bring everything together into a calculation that satisfies our basic rules and goals for now. As stated, I can modify it if better mathematical approaches are found, but we need something to test. I am investigating basic math formulas to see which curves look like they fit the preferred results (just simple squared, cubed, and root graphs), so I will at least record those to test in the future. Finally, I need to fully implement the system so I can test the bare basics: making sure the different agents actually travel differently around the window objects in the world, and that the extra calculations do not slow processing to a crawl.
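For comparing the squared, cubed, and root curves, one option (an assumption, not something settled in the post) is to normalize affinity to [-1, 1] around the average and apply a sign-preserving power. An exponent of 1 keeps the linear shape; 2 or 3 flatten the response near neutral; 0.5 makes it steeper near neutral. `AffinityCurves` is a hypothetical helper.

```csharp
using System;

// Hypothetical shaping functions for comparing curve shapes.
public static class AffinityCurves
{
    // Maps affinity to [-1, 1]: -1 = MIN, 0 = average (neutral), 1 = MAX.
    public static float Normalize(float affinity, float minAffinity, float maxAffinity)
    {
        float average = (minAffinity + maxAffinity) / 2f;
        return (affinity - average) / ((maxAffinity - minAffinity) / 2f);
    }

    // Sign-preserving power curve, so aversion and attraction stay symmetric.
    // exponent 2 = squared, 3 = cubed, 0.5 = square root.
    public static float Shape(float t, float exponent)
    {
        t = Math.Clamp(t, -1f, 1f);
        return MathF.Sign(t) * MathF.Pow(MathF.Abs(t), exponent);
    }
}
```

Since -1, 0, and 1 map to themselves, a shaped value can replace the normalized affinity in a linear cost rate without breaking the MAX, MIN, and average constraints; only the in-between behavior changes.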

Updating A* for Multiple Agent Types of Pathfinding

March 20, 2020

Updating A*

Different Pathfinding Logic for Different Agent Types

A* Current System

The A* pathfinding currently performs the same calculations for every agent to determine its path, so every unit takes the same path as long as they share starting and target positions. We are looking to adjust this so there are various types of agents with different affinities for certain objects in the environment. This then needs to be incorporated into path determination so different types of agents will take different paths, even when using the same starting position and same target position.

Differentiating Pathfinding for Different Types of Agents

We want to add more parameters to the grid nodes besides flat cost that influence the agents' paths. These parameters also need to be able to influence different agents' paths differently (e.g. the parameters may give a node a cost of 10 for one agent, but 20 for another).

Approach

The initial needs for this system are parameters added to the nodes of the A* grid and matching parameters on the agents themselves. With this in place, we just need to pass a reference to each individual agent through to the eventual path calculation so it can also factor in these additional values.

The actual steps for this concept are laid out here:

– Individual agents need to have data within them that determines how they act

– Pass the UnitSimple instance itself through PathRequestManagerSimple.RequestPath along with the current information (its position, its target position, and the OnPathFound method)

– In PathRequestManagerSimple script, add UnitSimple variable to the PathRequest struct

– Now, RequestPath with added UnitSimple parameter can also pass its UnitSimple reference to its newly created PathRequest object

– Then, TryProcessNext method is run

– Here, the UnitSimple reference can be passed on to PathfindingHeapSimple through the pathfinding.StartFindPath() method call

– Add a UnitSimple parameter to the StartFindPath method in the PathfindingHeapSimple class alongside the existing parameters (start position and target position of the path request)

– PathfindingHeapSimple can now use variables and factors of UnitSimple when determining and calculating the path for that specific agent

– Finally, returns its determined path as a set of Vector3 waypoints to the agent (effectively stays the same process)
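The steps above can be sketched in plain C# with simplified stand-ins. `PathRequestManagerSketch` and the `Func` standing in for `pathfinding.StartFindPath` are hypothetical, and this sketch processes requests immediately, which may differ from the real manager; the point is only that the UnitSimple reference rides along in the PathRequest struct until the pathfinder can read it.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

// Simplified stand-in for the agent; WindowAffinity is example per-agent data.
public class UnitSimple
{
    public float WindowAffinity;
}

public struct PathRequest
{
    public Vector3 PathStart;
    public Vector3 PathEnd;
    public UnitSimple Unit;                    // new: the requesting agent travels with the request
    public Action<Vector3[], bool> Callback;   // OnPathFound(waypoints, success)

    public PathRequest(Vector3 start, Vector3 end, UnitSimple unit, Action<Vector3[], bool> callback)
    {
        PathStart = start; PathEnd = end; Unit = unit; Callback = callback;
    }
}

public class PathRequestManagerSketch
{
    readonly Queue<PathRequest> queue = new Queue<PathRequest>();
    readonly Func<Vector3, Vector3, UnitSimple, Vector3[]> startFindPath;   // stands in for StartFindPath

    public PathRequestManagerSketch(Func<Vector3, Vector3, UnitSimple, Vector3[]> startFindPath)
        => this.startFindPath = startFindPath;

    public void RequestPath(Vector3 start, Vector3 end, UnitSimple unit, Action<Vector3[], bool> callback)
    {
        queue.Enqueue(new PathRequest(start, end, unit, callback));
        TryProcessNext();
    }

    void TryProcessNext()
    {
        while (queue.Count > 0)
        {
            PathRequest current = queue.Dequeue();
            // The unit reference is forwarded so cost calculation can depend on the agent.
            Vector3[] waypoints = startFindPath(current.PathStart, current.PathEnd, current.Unit);
            current.Callback(waypoints, waypoints.Length > 0);
        }
    }
}
```

Two agents requesting the same start and target can now receive different waypoint arrays, because the pathfinder sees which agent is asking.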

Next Steps

This is just a conceptual plan, so I still need to implement it to see if it works and how it runs. I will also be looking to add some parameters to the nodes and agents, so I can start differentiating various types of agents to test the system.

UnityLearn – AI For Beginners – Finite State Machines – Pt.02 – Finite State Machine Challenge

April 6, 2020

AI For Beginners

Finite State Machines

Beginner Programming: Unity Game Dev Courses

Unity Learn Course – AI For Beginners

Finite State Machine Challenge

This tutorial provided a challenge to complete and then provided a solution. The challenge was to create a state where the NPC would retreat to an object tagged as "Safe" when the player approached the NPC closely from behind.

My Approach

Since they had a State enum named Sleep already that we had not worked with yet, I used that as the name of this new state (I started with Retreat, but then found the extra Sleep enum so I changed to that since I assumed it would be more consistent with the tutorial). Similar to the CanSeePlayer bool method added to the base State class for detecting when the player is in front of the NPC, I added an IsFlanked bool method here that worked similarly, but just detected if the player was very close behind instead of in front. I used this check in the Patrol and Idle state Update methods to determine if the agent should be sent into the new Sleep state.

In the Sleep state itself I used similar logic from the Pursue state for the constructor, so I set the speed to a higher value and isStopped to false so the NPC would start quickly moving to the safe location. In the Enter stage I found the GameObject with tag “Safe” (since this was set in the conditions for the challenge to begin with) and used SetDestination with that object’s transform.position.

The Update stage simply checked each frame whether the NPC had gotten close to the safe object (using the magnitude of the vector between them), and once it was close enough, it set nextState to Idle before exiting (since Idle quickly goes back to Patrol in this tutorial anyway, this is the only option really needed).

Finally, the Exit stage just performs ResetTrigger for isRunning to reset the animator and moves on to the next State (which is only Idle as an option at this time).
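A plain C# sketch of an IsFlanked check in the spirit described above: the player counts as flanking when close enough and inside a cone around the NPC's negative forward vector. In Unity, `Vector3.Angle(direction, -npc.transform.forward)` would give the angle directly; `FlankCheck` and its distance and angle thresholds are hypothetical.

```csharp
using System;
using System.Numerics;

public static class FlankCheck
{
    // True when the player is within closeDistance and inside a cone of halfAngleDegrees
    // around the NPC's backward direction (its negative forward vector).
    public static bool IsFlanked(Vector3 npcPosition, Vector3 npcForward, Vector3 playerPosition,
                                 float closeDistance, float halfAngleDegrees)
    {
        Vector3 toPlayer = playerPosition - npcPosition;
        float distance = toPlayer.Length();
        if (distance > closeDistance || distance < 1e-6f) return false;

        Vector3 back = -Vector3.Normalize(npcForward);
        float cos = Vector3.Dot(toPlayer / distance, back);
        // Clamp guards against rounding pushing the cosine slightly outside [-1, 1].
        float angle = MathF.Acos(Math.Clamp(cos, -1f, 1f)) * 180f / MathF.PI;
        return angle < halfAngleDegrees;
    }
}
```

For an NPC at the origin facing +z, a player 2 units directly behind it is flanking with a 3 unit distance and 30 degree cone, while the same player 2 units in front, or 5 units behind, is not.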

Their Approach:

Most of what they did was very similar, although they did make a new State named RunAway instead of the extra Sleep State, so I could have stuck with Retreat and been fine.

Notable differences were that they checked whether the player was behind the NPC by reversing the order of subtraction in the direction check (changing player - npc to npc - player), whereas I had the angle check use the NPC's negative forward vector instead of its positive forward vector. These give effectively the same results, but I preferred my approach since it matches more closely what is actually being checked.

They also found the safe GameObject immediately in the constructor, whereas I did this in the Enter stage of the State. Again, this gives the same results in most cases, but I think their approach was better here: the sooner FindGameObjectWithTag is performed and the reference set, the more certain it is to be available when it is needed.

Finally, for their distance check to see if they had arrived at the safe zone, they used a native NavMeshAgent value, remainingDistance. I used the standard distance check of subtracting the vectors and checking the magnitude, so these again both give similar results. Mine is more explicit in how it is checking, and the NavMeshAgent value is just cleaner, so these both had pros and cons.

Summary

This was a nice challenge for working with a simple existing finite state machine. Similar to what they mentioned in the tutorial, I think storing the safe object in the static GameEnvironment script and pulling it from there (instead of calling FindGameObjectWithTag every time the NPC enters the Sleep/RunAway State) would be much more efficient. To help with checking states and debugging, I also added a Debug.Log to the base State Enter stage method that printed the name of the current State each time it was entered. This showed me immediately which State was entered and when, so it was a very nice state machine check that only required a single line of code.

UnityLearn – AI For Beginners – Finite State Machines – Pt.01 – Finite State Machines

April 1, 2020

AI For Beginners

Finite State Machines

Beginner Programming: Unity Game Dev Courses

Unity Learn Course – AI For Beginners

Finite State Machines

Finite State Machine (FSM): conceptual machine that can be in exactly one of any number of states at any given time. Represented by a graph where nodes are the states, and the paths between them are the transitions from one state to another. An NPC will stay in one state until a condition is met which changes it to another state.

Each state has 3 core methods: Enter, Update, Exit

- Enter: runs as soon as the state is transitioned to

- Update: is the continuous logic run while in this state

- Exit: run at the moment before the NPC moves on to the next state

State Machine Template Code (the core of this can be used for each individual state):

public class State
{
    public enum STATE { IDLE, PATROL, PURSUE, ATTACK, SLEEP };
    public enum EVENT { ENTER, UPDATE, EXIT };

    public STATE name;
    protected EVENT stage;
    protected State nextState; // state to hand control to after Exit

    // The constructor must share the class name (State), not the enum name (STATE)
    public State()
    {
        stage = EVENT.ENTER;
    }

    public virtual void Enter() { stage = EVENT.UPDATE; }
    public virtual void Update() { stage = EVENT.UPDATE; }
    public virtual void Exit() { stage = EVENT.EXIT; }

    // Runs the current stage and returns the state to use on the next call
    public State Process()
    {
        if (stage == EVENT.ENTER) Enter();
        if (stage == EVENT.UPDATE) Update();
        if (stage == EVENT.EXIT)
        {
            Exit();
            return nextState;
        }
        return this;
    }
}

Creating and Using A State Class

State class template (similar but slightly different from last tutorial, with some comments):

using UnityEngine;
using UnityEngine.AI;

public class State
{
    public enum STATE { IDLE, PATROL, PURSUE, ATTACK, SLEEP };
    public enum EVENT { ENTER, UPDATE, EXIT };

    // Core state identifiers
    public STATE name;
    protected EVENT stage;

    // Data to set for each NPC
    protected GameObject npc;
    protected Animator anim;
    protected Transform player;
    protected State nextState;
    protected NavMeshAgent agent;

    // Parameters for NPC utilizing states
    float visionDistance = 10.0f;
    float visionAngle = 30.0f;
    float shootDistance = 7.0f;

    public State(GameObject _npc, NavMeshAgent _agent, Animator _anim, Transform _player)
    {
        npc = _npc;
        agent = _agent;
        anim = _anim;
        stage = EVENT.ENTER;
        player = _player;
    }

    public virtual void Enter() { stage = EVENT.UPDATE; }
    public virtual void Update() { stage = EVENT.UPDATE; }
    public virtual void Exit() { stage = EVENT.EXIT; }

    public State Process()
    {
        if (stage == EVENT.ENTER) Enter();
        if (stage == EVENT.UPDATE) Update();
        if (stage == EVENT.EXIT)
        {
            Exit();
            return nextState;
        }
        return this;
    }
}

Notice that the public virtual methods for the various stages of a state look a bit awkward. Both Enter and Update set the stage to EVENT.UPDATE because each should set stage to the next stage to run, and for both of them that is Update. After entering, you move to Update, and each Update moves to Update again until something tells it to Exit.

They also started making actual State scripts, which create new classes that inherit from this base State class. The first example was an Idle state with little going on, to build a base understanding. Each of the stage methods (Enter, Update, Exit) called the base version of the method from the base class as well as running its own logic particular to that state. Calling the base methods ensured the next stage was set properly and uniformly.

Patrolling the Perimeter

This tutorial adds the next State for the State Machine, Patrol. This gets the NPC moving around the waypoints designated in the scene using a NavMesh.

They then create the AI class, which is the foundational logic for the NPC that will actually be utilizing the states. This is a fairly simple script in that its Update simply runs the line:

currentState = currentState.Process();

This handles properly setting the next State with each Update, as well as deciding which state to use and which stage of that state to run.

It turns out running the base Update method at the end of all the individual State classes’ Update methods was overwriting their ability to set the stage to Exit, so they could never leave the Update stage. They fixed this by simply removing those base method calls.
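To see the stage flow and this bug fix together, here is a stripped-down, Unity-free version of the base State, an Idle and a Patrol state, and the AI driver. The `Log` list and the two-update Idle timer are hypothetical additions for illustration. Idle's Update deliberately does not call `base.Update()`; if it did, the stage would be reset to UPDATE right after being set to EXIT.

```csharp
using System;
using System.Collections.Generic;

public abstract class State
{
    public enum EVENT { ENTER, UPDATE, EXIT }

    protected EVENT stage = EVENT.ENTER;
    protected State nextState;
    public readonly List<string> Log;   // records stage calls so the flow can be inspected

    protected State(List<string> log) { Log = log; }

    public virtual void Enter() { stage = EVENT.UPDATE; }
    public virtual void Update() { stage = EVENT.UPDATE; }
    public virtual void Exit() { stage = EVENT.EXIT; }

    public State Process()
    {
        if (stage == EVENT.ENTER) Enter();
        if (stage == EVENT.UPDATE) Update();
        if (stage == EVENT.EXIT) { Exit(); return nextState; }
        return this;
    }
}

public class Idle : State
{
    int ticks;
    public Idle(List<string> log) : base(log) { }

    public override void Enter() { Log.Add("Idle.Enter"); base.Enter(); }

    public override void Update()
    {
        Log.Add("Idle.Update");
        // Hypothetical transition: patrol after two updates. No base.Update() here,
        // otherwise stage would be reset to UPDATE and the state could never exit.
        if (++ticks >= 2) { nextState = new Patrol(Log); stage = EVENT.EXIT; }
    }

    public override void Exit() { Log.Add("Idle.Exit"); base.Exit(); }
}

public class Patrol : State
{
    public Patrol(List<string> log) : base(log) { }
    public override void Enter() { Log.Add("Patrol.Enter"); base.Enter(); }
    public override void Update() { Log.Add("Patrol.Update"); }
}

public class AI
{
    public State currentState;
    public AI(State start) { currentState = start; }

    // Equivalent of the single line in the tutorial's Update.
    public void Tick() { currentState = currentState.Process(); }
}
```

Three ticks log Idle.Enter, Idle.Update, Idle.Update, Idle.Exit, Patrol.Enter, Patrol.Update. Enter and Update run in the same Process call because Process checks each stage with separate if statements.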

Summary

Using Finite State Machines is a clean and organized way to give NPCs various types of behaviors. It keeps the code clean by organizing each state's behaviors into its own class and using a central AI manager for each NPC to move between the states. This also ensures an NPC is only in a single state at any given time, which reduces errors and simplifies debugging.

This setup is similar to other Finite State Machine implementations I have run into in Unity. The Enter, Update, and Exit methods are core in any basic implementation.

UnityLearn – AI For Beginners – Navigation Meshes – Pt.02 – Navigation Meshes

March 30, 2020

AI For Beginners

Navigation Meshes

Beginner Programming: Unity Game Dev Courses

Unity Learn Course – AI For Beginners

Navigation Mesh Introduction

Nav Mesh: Unity feature for creating a navigable area for agents to traverse.

There are 4 main tabs in the Navigation window:

- Agents

- Areas

- Bake

- Object

Agents

Core Parameters: Radius, Height, Step Height, Max Slope

These dimensions determine where the agent can fit when navigating around obstacles, as well as how it can traverse elevation differences. Radius and height limit where a character can go (it cannot go between very small gaps or under objects very close to the ground), step height is the largest elevation difference it can step up in a single step, and max slope is how steep a surface can be for the agent to travel on. The agent type's name lets you save several types of agents, with various values for these 4 core parameters.

Areas

How you define different costs for different types of areas (used in A* pathing).

Bake

Creates the Nav Mesh over your given series of meshes using their polygons. It does this using a template agent called the baked agent size. By default there is only a single mesh created for the default agent type, but there are additional tools that can allow you to create multiple meshes to help handle various sized/types of agents. The Generated Off Mesh Links parameters determine how far off the mesh an agent should be able to jump or drop to get to another mesh location.

Advanced Options (Under Bake):

- Manual Voxel Size: lets you determine the voxel size used to generate the Nav Mesh; larger voxels are less detailed and follow the mesh less accurately; default is 3.00 voxels per agent radius; generally aiming for a value between 2 and 4

- Min Region Area: Helps remove areas that are deemed navigable, but are too small to really be of use or will cause issues by existing

- Height Mesh: bool to determine if Nav Mesh should average elevations to turn steps into slopes or not

Object

Where you can assign specific area types to different parts of the mesh, choose which parts of your mesh to generate nav meshes for, and create mesh links, which help agents travel from one nav mesh to another by jumping or falling.

From Waypoints to NavMesh

Introduction of Unity NavMesh, where they introduce the concept of baking the Nav Mesh with use of static game objects and adding the NavMeshAgent component to the AI agents you want to follow the Nav Mesh.

NavMeshAgents

They set up a new scene and project with a Nav Mesh. Nav Mesh specifically looks for "Navigation Static" game objects to build around when baking. They showed that selecting a gameObject before entering play mode with the Nav Mesh active helps visualize how the Nav Mesh is determining its path.

Areas and Costs

The Areas tab in the Navigation window lets you set different parts of the mesh as different types of areas. To assign an area, select the desired polygons or meshes and go to the Object tab, where you can choose which Area applies to them. These areas can then be used to tell agents where they can and cannot go. This is done in the agent's NavMeshAgent component by changing the Area Mask under Path Finding. Turning an Area type on or off designates whether the agent can travel on those meshes at all.

In the Areas tab, you can assign different Cost values to these different areas. This can make them more or less appealing for the agents to traverse across (higher values for cost mean the agents are less likely to use that path).

Following a Player on a NavMesh and Setting Up Off-Mesh Links

This tutorial set up another scene in another project, this time based on following the player around. This was as simple as calling the NavMeshAgent component's SetDestination method with the player's position in Update.

Off Mesh Links

Off Mesh Links: used if you have gaps in your NavMesh that you want an agent to cross

To create the Mesh Links, select the polygons of interest that you want to link over a gap and go to the Object tab. Here you can select “Generate Mesh Links”. Then go to the Bake tab, set the “Drop Height” and/or “Jump Distance” under the “Generated Off Mesh Links” section, and bake the mesh again to incorporate these links. They will be visually shown as circles between the meshes. Jump Distance is good for crossing horizontal disconnects, where Drop Height is good for crossing significant vertical disconnects. Setting drop links is similar, in that you select the two main groups of meshes in question and generate the mesh links.

Summary

Having done a lot of work with A*, including building a pathfinding system with it from scratch, I found that many of the Nav Mesh tuning factors and parameters made a lot of sense given how A* works. It was good to see so much overlap, since I can learn from the parameters Nav Mesh exposes to give my A* system effective, modifiable settings to tweak for design needs.

Nav Mesh does seem powerful and very easy to get running, but I would have to work with it more to see just how controllable it is. I still like having my own A* system whose internals I know well enough to tweak exactly how I want. Nav Mesh does, however, offer many of the features I am looking to add, so I may need to explore it more to see how easy it is to work with.

A* Architecture Project – Spawning Agents and Area of Influence Objects

March 25, 2020

Updating A*

Spawn and Area of Influence Objects

Spawning Agents

Goal: The ability to spawn agents that can use the A* grid for pathing, with options to spawn them in different locations while all using the grid properly.

This was rather straightforward to implement, but I did run into a completely unrelated issue. I created an AgentSpawnManager class which simply holds a prefab reference for the agents to spawn, a transform for the target to pass on to the agents, and an array of possible spawn points (to support several spawn locations). This class creates new gameObjects from the prefab reference and then sets their target to the one determined by the spawner. This was worth keeping an eye on, since there can sometimes be issues with Awake and Start methods when setting values after instantiation.
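The spawner's responsibilities can be sketched engine-agnostically in Python. This is not Unity code: `Agent`, `spawn`, and the field names are hypothetical stand-ins for the prefab, `Instantiate`, and the agent's target reference. The sketch also mirrors the Awake/Start ordering issue. In Unity, Awake runs during Instantiate itself, before the caller can assign anything, while Start runs later. A target set right after instantiation is therefore visible in Start but not in Awake.

```python
import random
from dataclasses import dataclass, field

@dataclass
class Agent:
    """Stand-in for a spawned agent; __post_init__ plays the role of Unity's Awake."""
    position: tuple
    target: object = None
    target_at_awake: object = field(init=False, default=None)

    def __post_init__(self):
        # Runs during creation, before the spawner has assigned a target.
        self.target_at_awake = self.target

    def start(self):
        # Runs later (like Unity's Start), after the spawner has set the target.
        return self.target

class AgentSpawnManager:
    def __init__(self, target, spawn_points, rng=None):
        self.target = target
        self.spawn_points = list(spawn_points)
        self.rng = rng or random.Random()
        self.agents = []

    def spawn(self, index=None):
        """Spawn at the given spawn point, or at a random one if index is None."""
        if index is None:
            point = self.rng.choice(self.spawn_points)
        else:
            point = self.spawn_points[index]
        agent = Agent(position=point)   # "instantiate the prefab"
        agent.target = self.target      # assign the target right after creation
        self.agents.append(agent)
        return agent

manager = AgentSpawnManager(target="Player", spawn_points=[(0, 0, 0), (10, 0, 5)])
a = manager.spawn(1)
print(a.position, a.target_at_awake, a.start())  # (10, 0, 5) None Player
```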

This was all simple enough, but the agents were spawning without moving at all. It turned out the spawn location was above a surface that itself sat above an obstacle (the obstacle was below the terrain and entirely covered by it). This was an issue with how my ray detection and node grid were set up.

Editing the Grid Creation Raycast

The node and grid creation for the A* system uses a raycast to detect obstacles, as well as types of terrain, to tell the nodes their costs or whether they are usable at all. It is very common to use large planes or surfaces as general terrain and place obstacles on top of them. A ray check that reports everything along its length would therefore almost always pick up walkable terrain, even if it hit an obstacle as well.

To get around this, I simply had it check for obstacles first, and if it detected one, mark the node unwalkable and move on. However, this created an issue in the reverse case: if an obstacle extended a bit past the terrain into nodes below the surface, those nodes would be picked up as false obstacles. In this case, it was picking up obstacles located entirely below the surface.

I was using a distance-limited raycast in Unity, which just checks for anything along a set distance. I looked into switching to an approach that detects only the first collider the ray hits and uses that information. The hit information returned by the raycast does exactly this.
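The difference between the two checks can be sketched in Python. This is a simplified model, not Unity's physics API. The ray is assumed to point straight down, and each collider is reduced to the height of its top surface plus a layer name; `Collider`, `any_hit_is_obstacle`, and `first_hit` are hypothetical names. In Unity, the analogous first-hit behavior comes from `Physics.Raycast` with an `out RaycastHit`, which reports the closest collider hit.

```python
from dataclasses import dataclass

@dataclass
class Collider:
    top_y: float   # height where a downward ray first touches this collider
    layer: str     # e.g. "Walkable" or "Unwalkable"

def in_range(c, ray_origin_y, max_distance):
    """True if the collider's top lies below the ray origin within max_distance."""
    return 0 <= ray_origin_y - c.top_y <= max_distance

def any_hit_is_obstacle(colliders, ray_origin_y, max_distance):
    """Old approach: an obstacle anywhere along the ray marks the node unwalkable."""
    return any(
        c.layer == "Unwalkable" and in_range(c, ray_origin_y, max_distance)
        for c in colliders
    )

def first_hit(colliders, ray_origin_y, max_distance):
    """New approach: only the closest collider along the downward ray counts."""
    hits = [c for c in colliders if in_range(c, ray_origin_y, max_distance)]
    return min(hits, key=lambda c: ray_origin_y - c.top_y, default=None)

# The bug case: an obstacle buried entirely under the terrain surface.
column = [Collider(top_y=0.0, layer="Walkable"), Collider(top_y=-2.0, layer="Unwalkable")]

print(any_hit_is_obstacle(column, 50.0, 100.0))  # True (a false obstacle)
print(first_hit(column, 50.0, 100.0).layer)      # Walkable
```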

Unfortunately, I am using a layer setup for walkable and unwalkable (obstacle) terrain, so I needed to incorporate that into my hit check. Checking layers feels awkward and unreadable in Unity scripting. For now, I am just using the hardcoded integer value of the unwalkable layer when checking for obstacles. This is at least enough to let the system work properly in the meantime.
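One way to avoid the hardcoded integer is to look layers up by name and test bits in a mask. Unity provides `LayerMask.NameToLayer` and `LayerMask.GetMask` for the lookups. The Python below only mimics the underlying bit arithmetic, using a hypothetical name-to-index table; in a real project those indices come from the project's layer settings.

```python
# Hypothetical layer table; the indices here are made up for illustration.
LAYERS = {"Default": 0, "Walkable": 8, "Unwalkable": 9}

def name_to_layer(name):
    """Layer index for a name (-1 if unknown), looked up instead of hardcoded."""
    return LAYERS.get(name, -1)

def get_mask(*names):
    """Combine layer names into a bitmask with one bit per layer index."""
    mask = 0
    for name in names:
        index = name_to_layer(name)
        if index >= 0:
            mask |= 1 << index
    return mask

def layer_in_mask(layer_index, mask):
    """True if the layer's bit is set in the mask."""
    return ((mask >> layer_index) & 1) == 1

unwalkable_mask = get_mask("Unwalkable")
print(unwalkable_mask)                    # 512
print(layer_in_mask(9, unwalkable_mask))  # True
print(layer_in_mask(8, unwalkable_mask))  # False
```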

Influence Area with Objects

I wanted to be able to create objects that could influence the cost of nodes in an area significantly larger than the objects themselves. The idea is that a small but visible or detectable object could change the appeal of the nodes around it, drawing agents toward it or pushing them away.

For a base test, I created a simple class called Influence to place on these objects. The first value it needed was an influence int to determine how much to alter the cost of the nodes within its reach. Then, to determine the influence range, I gave it an int each for the x and z directions, defining a rectangle of influence in units of nodes. I also added some get-only properties that calculate values from x and z, to help center these influence areas in the future.
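Here is a minimal Python sketch of such a class, assuming the rectangle is centered on a node index. The property names `half_x` and `half_z` and the `node_range` method are hypothetical, since the post does not list the actual get-only values.

```python
from dataclasses import dataclass

@dataclass
class Influence:
    influence: int   # amount added to each affected node's cost
    size_x: int      # width of the influence rectangle, in nodes
    size_z: int      # depth of the influence rectangle, in nodes

    @property
    def half_x(self):
        """Nodes to the left of the center node (get-only)."""
        return (self.size_x - 1) // 2

    @property
    def half_z(self):
        """Nodes below the center node (get-only)."""
        return (self.size_z - 1) // 2

    def node_range(self, center_x, center_z):
        """Inclusive node-index bounds (x0, x1, z0, z1) around a center node."""
        x0 = center_x - self.half_x
        z0 = center_z - self.half_z
        return x0, x0 + self.size_x - 1, z0, z0 + self.size_z - 1

print(Influence(200, 3, 5).node_range(10, 10))  # (9, 11, 8, 12)
print(Influence(200, 4, 4).node_range(10, 10))  # (9, 12, 9, 12)
```

Odd sizes center exactly on the node. With even sizes, this sketch places the extra node on the positive side, which is one of the choices a centering helper has to make.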

I then added an Influence array to the AGrid class, which contains all the logic for initializing the grid of nodes and setting their values at start up. After setting up the grid, it goes through this array of Influence objects and uses each one's center transform position to determine which node it is centered on. It then finds all the nodes around that node according to the object's x and z dimensions and modifies their cost values by the object's influence value. Everything worked pretty nicely for this.
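The two steps (world position to node, then modifying a rectangle of node costs) can be sketched in Python. The sketch assumes the grid's bottom-left corner sits half the grid size away from its center, as in the AGrid breakdown. It also assumes node (i, j) covers the nodeDiameter-sized cell starting at that corner plus (i, j) times the nodeDiameter. `node_from_world` and `apply_influence` are hypothetical names, and nodes outside the grid are simply skipped.

```python
import math

def node_from_world(x, z, center, grid_world_size, node_diameter):
    """Map a world (x, z) position to grid indices, clamped to the grid."""
    cols = int(grid_world_size[0] / node_diameter)
    rows = int(grid_world_size[1] / node_diameter)
    left = center[0] - grid_world_size[0] / 2    # world bottom-left x
    bottom = center[1] - grid_world_size[1] / 2  # world bottom-left z
    i = min(max(math.floor((x - left) / node_diameter), 0), cols - 1)
    j = min(max(math.floor((z - bottom) / node_diameter), 0), rows - 1)
    return i, j

def apply_influence(costs, center_node, influence, size_x, size_z):
    """Add influence to every node in a size_x by size_z rectangle around center_node.

    costs is a list of columns (costs[i][j]); nodes outside the grid are skipped.
    """
    ci, cj = center_node
    i0 = ci - (size_x - 1) // 2
    j0 = cj - (size_z - 1) // 2
    for i in range(max(i0, 0), min(i0 + size_x, len(costs))):
        for j in range(max(j0, 0), min(j0 + size_z, len(costs[i]))):
            costs[i][j] += influence

# A 10 x 10 area with nodeRadius 1 (nodeDiameter 2) gives 5 x 5 nodes.
costs = [[0] * 5 for _ in range(5)]
center = node_from_world(0.5, 0.5, center=(0.0, 0.0), grid_world_size=(10.0, 10.0), node_diameter=2.0)
apply_influence(costs, center, influence=200, size_x=3, size_z=3)
print(center)                                # (2, 2)
print(sum(col.count(200) for col in costs))  # 9
```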

As a final touch to help with visualization, I added an OnDrawGizmos method that draws yellow wire cubes around the influence objects to match their area of influence. The dimensions are in node units, but Gizmos.DrawWireCube wants true Unity units. I therefore multiplied the x and z node dimensions of each influence by the nodeDiameter (the real world size of each node) to convert them. For example, an influence area of 3 by 5 nodes with a nodeDiameter of 2 becomes a 6 by 10 unit wire cube.

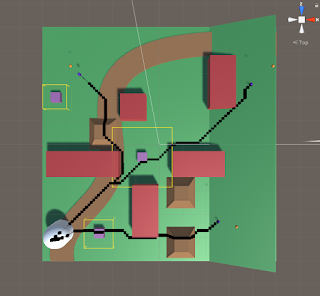

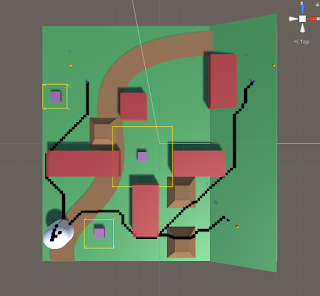

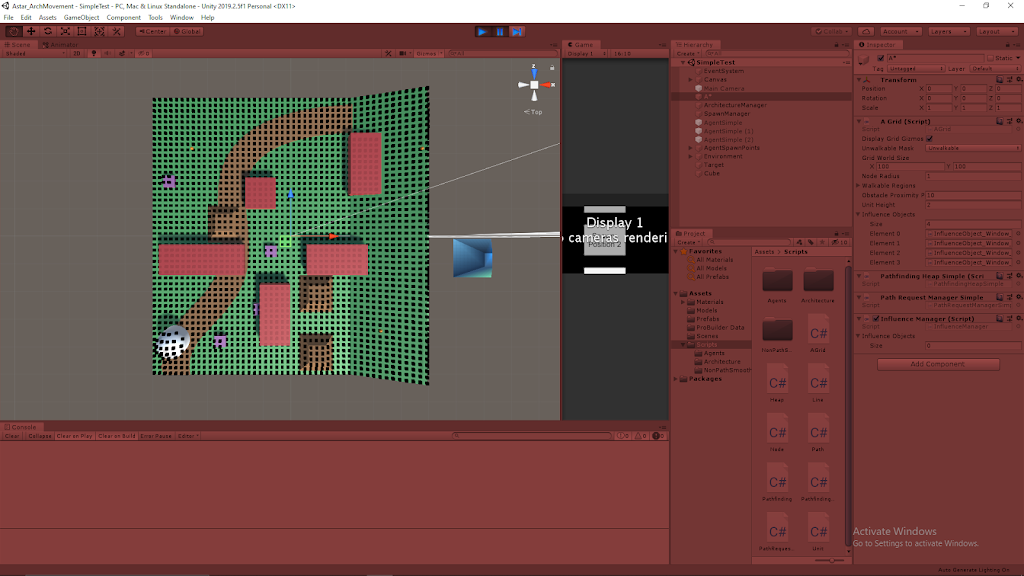

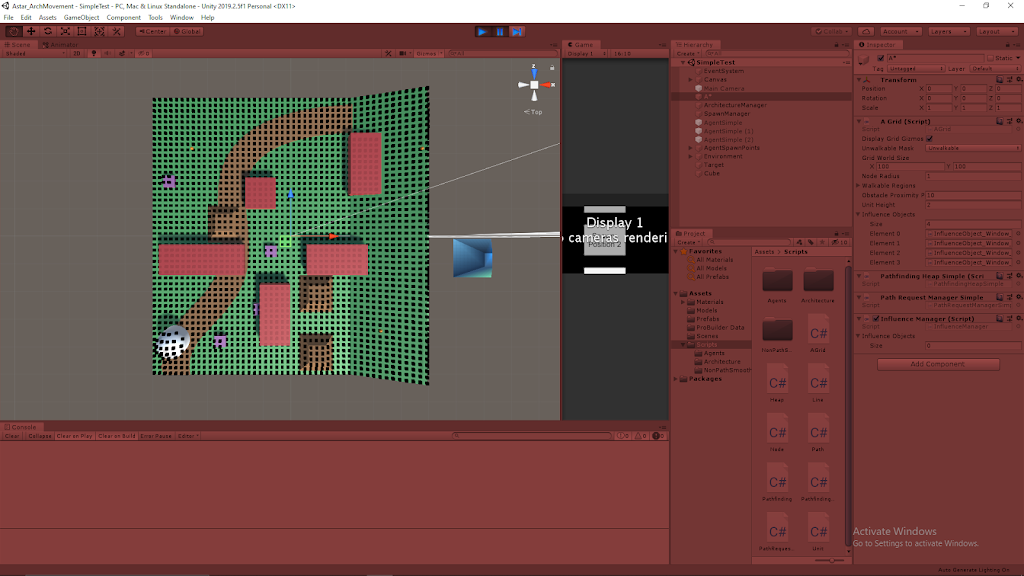

Two sample images show the influence objects in effect. The small purple squares are the influence objects, and the yellow wire frame cubes around them show their estimated area of influence. The first image shows the agents' paths when the influence of all squares is set to 0 (no influence). The second shows the paths when the influence is set to 200, which makes the nodes around them much more costly and less appealing to travel over.

Summary

The raycast and layer system for detecting the terrain and initializing the grid could use some work to perform more cleanly and safely, especially for future updates. The spawning seems to have no issues at all, so it should be a good base to work with and edit moving forward. The basic implementation of the influence objects has been promising so far. I will look into turning it into a higher-level parent class or an interface, since this will be a large part of the project. There may be many objects that use the same core logic but want special twists on it, such as different area-of-influence shapes or different calculations for how influence is applied.

UnityLearn – AI For Beginners – Waypoints and Graphs – Pt.01 – Graph Theory

March 24, 2020

AI For Beginners

Graph Theory


Beginner Programming: Unity Game Dev Courses

Unity Learn Course – AI For Beginners

Graph Theory

Intro

The AI for Beginners course starts very basic so I have not covered a lot up until now. It handles the basics of moving an object with simple scripting, as well as guiding and aiming that movement a bit. This section starts to get into some more interesting theory and background for AI.

Graphs are simply collections of nodes and edges. Nodes are locations or points of data, and edges are the paths connecting them (which also contain significant data themselves). There are two directional types for edges within these graphs: directed and undirected. Directed paths only allow for movement between two nodes in a single direction, while undirected paths allow for movement either direction between nodes.

Graphs can be used in any case that involves moving between states. These states can be real or conceptual, so the nodes and edges between them can be much more theoretical than actual objects or locations.

Utility Value: This is the value for an edge. Some examples shown were time, distance, effort, and cost. These are values that help an NPC make a decision to move from one node to the next over said edge.
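A graph with directed and undirected edges that carry a utility value can be stored as an adjacency list. The Python sketch below shows one common representation, not the course's. The `Graph` class and the node names are made up for illustration.

```python
from collections import defaultdict

class Graph:
    """Adjacency-list graph whose edges carry a utility value (e.g. time or distance)."""

    def __init__(self):
        self.edges = defaultdict(dict)  # node -> {neighbor: utility}

    def add_edge(self, a, b, utility=1.0, directed=False):
        self.edges[a][b] = utility
        if not directed:
            self.edges[b][a] = utility  # undirected: traversable both ways

    def neighbors(self, node):
        return self.edges[node].items()

g = Graph()
g.add_edge("Door", "Hall", utility=2.0)                   # undirected
g.add_edge("Ledge", "Floor", utility=1.0, directed=True)  # one-way drop
print(dict(g.neighbors("Hall")))   # {'Door': 2.0}
print(dict(g.neighbors("Floor")))  # {}
```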

Basic High Level Algorithms for Searching Nodes

Breadth-First Search

Marks the original node as 1, then all nodes adjacent to it as 2, then all nodes adjacent to those as 3, and so on until it reaches the destination node. It then counts backwards to determine the path. Because it expands outward evenly in every direction, it can end up examining every node in the graph. This guarantees it finds the path with the fewest steps, but it makes the search expensive and time consuming.
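The numbering-and-counting-back description maps directly onto a short Python sketch. The graph is a plain dict of adjacency lists, and `bfs_path` is a hypothetical name. Many implementations store a parent per node instead of counting back, but this version follows the labels described above.

```python
from collections import deque

def bfs_path(graph, start, goal):
    """Breadth-first search that labels nodes by step count, then walks back from the goal.

    graph maps each node to a list of adjacent nodes. Returns None if goal is unreachable.
    """
    label = {start: 1}
    frontier = deque([start])
    while frontier:
        node = frontier.popleft()
        if node == goal:
            break
        for nxt in graph.get(node, []):
            if nxt not in label:
                label[nxt] = label[node] + 1
                frontier.append(nxt)
    if goal not in label:
        return None
    # Count backwards: from the goal, step to a neighbor labelled one lower.
    path = [goal]
    while path[-1] != start:
        current = path[-1]
        previous = next(
            n for n, step in label.items()
            if step == label[current] - 1 and current in graph.get(n, [])
        )
        path.append(previous)
    return path[::-1]

rooms = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D"],
    "D": ["B", "C", "E"],
    "E": ["D"],
}
print(bfs_path(rooms, "A", "E"))  # ['A', 'B', 'D', 'E']
```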

Depth-First Search

Starts at the NPC's position, picks one adjacent node and numbers it, then picks a single node adjacent to that one and numbers it, and so on. This continues until it reaches a dead end. It then returns to the last node that had another direction to try and heads off that way using the same method.
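A minimal Python sketch of this backtracking search, using the same dict-of-lists graph shape as a plain BFS would. `dfs_path` is a hypothetical name. The example shows that depth-first search returns the first path it finds, which is not necessarily the shortest: it goes the long way around even though A and E are directly connected.

```python
def dfs_path(graph, start, goal):
    """Depth-first search: follow one unvisited neighbor at a time, backtracking at dead ends.

    Returns the first path found (not necessarily the shortest), or None.
    """
    stack = [start]   # the current path; its top is the node being explored
    visited = {start}
    while stack:
        node = stack[-1]
        if node == goal:
            return list(stack)
        nxt = next((n for n in graph.get(node, []) if n not in visited), None)
        if nxt is None:
            stack.pop()   # dead end: return to the previous node and try another way
        else:
            visited.add(nxt)
            stack.append(nxt)
    return None

ring = {
    "A": ["B", "E"],
    "B": ["A", "C"],
    "C": ["B", "D"],
    "D": ["C", "E"],
    "E": ["A", "D"],
}
print(dfs_path(ring, "A", "E"))  # ['A', 'B', 'C', 'D', 'E']
```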

More Advanced General High Level Algorithm

A* Algorithm

All the nodes are numbered. A* keeps an open list (nodes found but not yet evaluated) and a closed list (nodes already evaluated). Three main cost values drive the search: the Heuristic cost (H cost), the Movement cost (G cost), and the F cost.

– Heuristic Cost (H cost): estimated cost of getting to the destination from that specific node (this value is generally distance related)

– Movement Cost (G cost): utility cost of moving from the start node to this node along the cheapest path found so far, built up one edge at a time

– F Cost: sum of the G cost and H cost, which determines the total value of that node; the node on the open list with the lowest F cost is evaluated next

Each node stores these cost values as well as its parent, which is the node it was reached from on the cheapest path found so far. Once the destination node is reached, following this chain of parent nodes back to the start gives the nodes that make up the path to travel.
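Here is a minimal A* sketch in Python on a 4-connected grid, tying the G, H, and F costs, the open and closed lists, and parent pointers together. Several choices are assumptions rather than the course's or Unity's implementation: Manhattan distance as the heuristic, a base step cost of 1, and an optional per-node `penalty` standing in for extra node costs such as influence.

```python
import heapq
import itertools

def a_star(walkable, start, goal, penalty=None):
    """A* on a 4-connected grid.

    walkable[x][z] is True for usable nodes; penalty[x][z] (optional) is extra cost
    added when entering a node. Returns the path as a list of (x, z), or None.
    """
    width, depth = len(walkable), len(walkable[0])
    penalty = penalty or [[0] * depth for _ in range(width)]

    def h_cost(node):
        # Manhattan distance never overestimates, since every step costs at least 1.
        return abs(node[0] - goal[0]) + abs(node[1] - goal[1])

    g_cost = {start: 0}
    parent = {start: None}
    tie = itertools.count()  # tie-breaker so the heap never compares nodes directly
    open_list = [(h_cost(start), next(tie), start)]  # ordered by F = G + H
    closed = set()

    while open_list:
        _, _, node = heapq.heappop(open_list)
        if node in closed:
            continue  # stale entry: this node was already evaluated more cheaply
        if node == goal:
            path = []
            while node is not None:  # follow the chain of parents back to the start
                path.append(node)
                node = parent[node]
            return path[::-1]
        closed.add(node)
        x, z = node
        for nxt in ((x + 1, z), (x - 1, z), (x, z + 1), (x, z - 1)):
            nx, nz = nxt
            if not (0 <= nx < width and 0 <= nz < depth) or not walkable[nx][nz]:
                continue
            new_g = g_cost[node] + 1 + penalty[nx][nz]
            if nxt not in closed and new_g < g_cost.get(nxt, float("inf")):
                g_cost[nxt] = new_g
                parent[nxt] = node
                heapq.heappush(open_list, (new_g + h_cost(nxt), next(tie), nxt))
    return None

maze = [
    [True, True, True],    # x = 0
    [False, False, True],  # x = 1: wall except at z = 2
    [True, True, True],    # x = 2
]
print(a_star(maze, (0, 0), (2, 0)))
# [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0)]
```

Passing a `penalty` grid with a large value on a node makes A* route around it whenever a detour is cheaper. This is the same effect the influence objects have on agent paths.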

Summary

This was a very simplified approach to graph theory, but it was at least a helpful refresher on how A* works. I also learned that Unity's NavMesh uses the A* algorithm at its foundation. The course gives me a good starting point of subjects and terminology for investigating the theory behind AI: graph theory, nodes and edges, and the basic search methods.