January 11, 2021

Raycast for A* Nodes

Architecture AI Project

Original Downward Raycast and Downfalls

The raycasting system that detects the environment to set up the base A* pathing nodes originally used downward raycasts. Each ray traveled until it hit the environment, and the hit set the position of the node as well as whether it was walkable. An extra sphere collision check around the point of contact was also performed to catch obstacles right next to the node.

This works for fairly open environments, but it had a major downside for our particular needs: it failed to detect openings within walls, primarily doors. Doors are almost always set within a wall, so the ray would hit the wall above the door and the node would read as unwalkable. This left most doors as unwalkable areas because of the walls above and around them.
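To make the failure case concrete, here is a minimal, engine-agnostic sketch of the downward probe. It is not the project code: it models one vertical column of the scene as a list of solid spans, and the names `Slab` and `downward_probe` as well as all heights are assumptions made up for illustration. The sphere check is left out to keep the focus on the ray itself.

```python
from typing import List, Optional, Tuple

# One solid span along a vertical column: (bottom, top, walkable).
# This is a hypothetical stand-in for a collider and its walkable layer.
Slab = Tuple[float, float, bool]


def downward_probe(column: List[Slab], start_y: float = 100.0) -> Optional[Tuple[float, bool]]:
    """Fire a ray down from start_y and report (hit height, walkable) for the first hit."""
    below = [slab for slab in column if slab[1] <= start_y]
    if not below:
        return None  # the ray hit nothing, so no node is placed
    _, top, walkable = max(below, key=lambda slab: slab[1])
    return top, walkable


floor = (0.0, 0.2, True)
lintel = (2.4, 3.0, False)  # the piece of wall above a door

open_floor = downward_probe([floor])        # (0.2, True)
doorway = downward_probe([floor, lintel])   # (3.0, False)
```

In the doorway column the ray stops on top of the wall above the door, so the node is placed there and marked unwalkable even though the floor underneath is clear.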

Upward Raycast System Approach

Method

The idea behind the upward raycast is to stop openings within walls from being marked unwalkable when they should be walkable. When the rays are fired upward, the first object hit can in most cases safely be assumed to be the floor, because our system only works with a single level at this time. Upon hitting the first walkable target (in this case, the assumed floor), the contact point is recorded and a second ray is fired upward from it until it hits an unwalkable target. If nothing is hit, the node is marked walkable, as there is clearly nothing unwalkable blocking the way. If a target is hit, the distance between the two contact points is calculated and compared with a constant height check. If the distance is greater than that constant, the node is still marked walkable even though the ray eventually hit an unwalkable object.

This approach attempts to measure the available space between the floor and any unwalkable object above it. If a wall sits directly on the floor, as many do, the distance will be very small, so it will fail the height check and the node will be set unwalkable appropriately. If there is a walkable door or a large opening such as an arch, the distance between the floor and the wall above the door or archway should be large enough that the system marks the area as walkable.
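The two-ray logic described above can be sketched roughly as follows. This is an engine-agnostic illustration rather than the project code: each vertical column is modeled as a list of solid spans, and `Slab`, `first_hit_above`, `is_walkable_upward`, the `MIN_CLEARANCE` value, and the example heights are all assumptions chosen for the sketch.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Slab:
    """A solid span along one vertical column (hypothetical stand-in for a collider)."""
    bottom: float
    top: float
    walkable: bool


MIN_CLEARANCE = 2.0  # assumed value for the constant height check, in world units


def first_hit_above(column: List[Slab], y: float, walkable: bool) -> Optional[float]:
    """Height at which an upward ray from y first meets a slab of the requested kind."""
    hits = [s.bottom for s in column if s.walkable == walkable and s.bottom >= y]
    return min(hits) if hits else None


def is_walkable_upward(column: List[Slab], start_y: float = -10.0,
                       min_clearance: float = MIN_CLEARANCE) -> bool:
    # First ray: start below the level and travel up to the assumed floor.
    floor_hit = first_hit_above(column, start_y, walkable=True)
    if floor_hit is None:
        return False  # no floor found, so there is nothing to stand on
    # Second ray: continue upward from the floor contact point.
    blocker_hit = first_hit_above(column, floor_hit, walkable=False)
    if blocker_hit is None:
        return True  # nothing unwalkable overhead
    # Compare the gap between the two contact points with the height check.
    return (blocker_hit - floor_hit) > min_clearance


floor = Slab(0.0, 0.2, walkable=True)
wall = Slab(0.2, 3.0, walkable=False)     # wall resting directly on the floor
lintel = Slab(2.4, 3.0, walkable=False)   # wall section above a door

print(is_walkable_upward([floor]))          # True
print(is_walkable_upward([floor, wall]))    # False
print(is_walkable_upward([floor, lintel]))  # True
```

Note that the measured gap starts at the underside of the floor, so it includes the floor thickness. A height constant tuned this way needs to allow for that.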

Sphere Collision Check for Obstacles

As in the original system, we still wanted a sphere collision check to catch obstacles very close to nodes, so that obstacles would not slip between the rays and effectively become walkable. We included it in much the same way, with one difference worth noting: the initial hit is now on the underside of the floor, so the thickness of the floor must be accounted for. Currently we simply use a larger radius so the check can reach up through the floor. In future edits, it could make sense to define a constant floor thickness and perform the sphere check above the initial raycast contact point, offset by this thickness.
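The proposed future edit could look something like the sketch below. It is only an illustration under assumptions: obstacles are modeled as axis-aligned boxes with y as the up axis, and `Box`, `sphere_overlaps_box`, `obstacle_check_center`, `FLOOR_THICKNESS`, and the numbers used are hypothetical, not taken from the project.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) with y as the up axis


@dataclass
class Box:
    """Axis-aligned box standing in for an obstacle collider (hypothetical)."""
    lo: Vec3
    hi: Vec3


def sphere_overlaps_box(center: Vec3, radius: float, box: Box) -> bool:
    """True if the sphere touches the box (nearest-point distance test)."""
    dist_sq = 0.0
    for c, lo, hi in zip(center, box.lo, box.hi):
        nearest = min(max(c, lo), hi)  # clamp the center onto the box
        dist_sq += (c - nearest) ** 2
    return dist_sq <= radius ** 2


FLOOR_THICKNESS = 0.2  # assumed constant floor thickness


def obstacle_check_center(hit_point: Vec3, floor_thickness: float = FLOOR_THICKNESS) -> Vec3:
    """Lift the sphere center from the floor underside to the floor surface."""
    x, y, z = hit_point
    return (x, y + floor_thickness, z)


underside_hit = (0.0, 0.0, 0.0)  # where the upward ray met the floor
obstacle = Box(lo=(0.3, 0.2, -0.5), hi=(1.0, 1.0, 0.5))  # sits on the floor surface
radius = 0.35

print(sphere_overlaps_box(underside_hit, radius, obstacle))                         # False
print(sphere_overlaps_box(obstacle_check_center(underside_hit), radius, obstacle))  # True
```

With the center offset by the floor thickness, the same radius catches the nearby obstacle that the sphere centered on the underside misses, so the radius no longer has to be inflated to reach through the floor.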

Test Results

Details

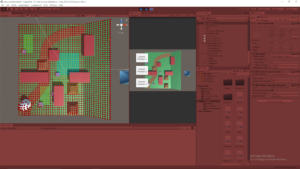

I compared the two raycast methods with a few areas in our current test project. In the following images, the nodes are visualized with Unity’s cube gizmos. The yellow nodes are “walkable” and the red nodes are “unwalkable”. Most of the large white objects are walls, and the highlighted light blue material objects are the doors being observed.

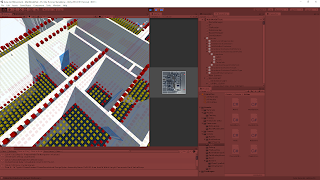

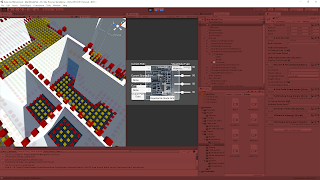

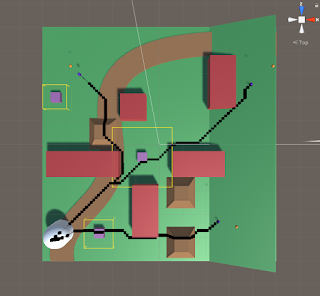

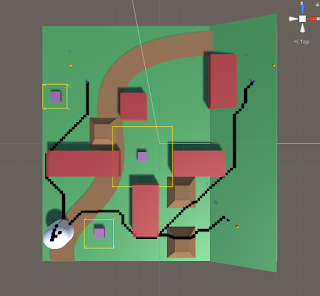

Test 1

A high-density door area was chosen to observe the node results of both systems.

The downward check can work for doors when the ray does not directly hit a wall, as the sphere check correctly marks those nodes walkable from the floor. However, the rays that hit the walls can be seen from the unwalkable nodes placed on top of the walls. A clear barrier is formed along the entirety of the walls, effectively making the doors unwalkable.

The upward raycast test shows that every door has a gap of walkable nodes at least one node wide. The doors that were unwalkable with the downward raycast are now walkable because the height check was met.

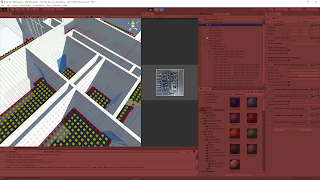

Test 2

A larger room with a single notable door was observed.

The downward check does pose a problem here, as a full line of unwalkable nodes can be seen on the floor blocking access through the door and into the room. Because the problem shows up in nodes on the floor rather than nodes on top of the wall, this is actually a case where the sphere collision check is the problem rather than the raycast itself. Changing the collision radius for the check could potentially solve the issue here.

The upward raycast is able to cleanly present a walkable path through this door into the room. While this gives us the result we wanted, it should be noted again that the difference can be attributed to the sphere collision check for obstacles. The same radius was used for both tests, but the upward raycast sphere originates from the bottom of the floor, so the extra distance it has to travel through the floor is what really opens up this path.

Summary

The upward raycast seems extremely promising in providing the results we wanted for openings, doors especially. The tests demonstrate that it helps with a major failure case of the downward check, so it is at the very least a significant upgrade to the original approach. The upward raycast with the distance check also succeeded in other door locations, supporting its consistency. It will still have trouble from time to time with very narrow openings, but it should work in the majority of cases.

via Blogger http://stevelilleyschool.blogspot.com/2021/01/architecture-ai-pathing-project-upward.html