September 13, 2019

Unity Shader Graph

Intro Tutorial

YouTube – Basics of Shader Graph – Unity Tutorial

By: Brackeys

This tutorial introduces you to Unity’s shader graph, so it starts with the bare minimum and goes over some simple features to get you started. Messing with vertex colors got me back into shaders in Unity, and shader graphs provide a really simple way to get some interesting visual effects when I need a break from trying to figure out shader coding.

I ran into a lot of snags right away that kept things from working. I missed the step at the beginning of the tutorial where they set the Unity project template to Lightweight RP while creating the project. I was able to get around this by doing what they did in their vertex color tutorial: I created a Lightweight Render Pipeline Asset and dragged it into the Graphics tab of the Project Settings.

I then found out that Unity does not like it when you rename a shader. I half expected this, since renaming a script means changing the class name in the code to match the file name, and this seemed like it could be a similar problem. Since the material/shader still was not working, I deleted the shader and made a new one with the proper name, and everything finally displayed as a non-error material.

The general effect they were going for was a glow, so they started with a Fresnel Effect node in the shader graph. This produces a bit of a glowing effect around the edges of the object, with a power value that controls how glowy the material is. We then explored applying colors to node effects like this one. This is done by creating a Color node and multiplying it with the node you intend to color.
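To get a feel for what the Fresnel Effect node and the multiply step are doing, here is a rough sketch in plain Python. It follows the formula given in Unity’s Shader Graph documentation for the node, `pow(1 - saturate(dot(normalize(Normal), normalize(ViewDir))), Power)`, but the function names (`fresnel_effect`, `tint`) are my own and not part of any Unity API, and it assumes non-zero input vectors.

```python
import math


def saturate(x):
    """Clamp a value to the range [0, 1], like HLSL's saturate()."""
    return max(0.0, min(1.0, x))


def normalize(v):
    """Scale a vector to unit length (assumes the vector is not all zeros)."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    """Dot product of two vectors of equal length."""
    return sum(x * y for x, y in zip(a, b))


def fresnel_effect(normal, view_dir, power):
    """Approximate the Fresnel Effect node for one surface point.

    Returns 0 where the surface faces the viewer and approaches 1 at
    grazing angles. A higher power pushes the effect toward the edges.
    """
    n = normalize(normal)
    v = normalize(view_dir)
    return (1.0 - saturate(dot(n, v))) ** power


def tint(color, strength):
    """Multiply an RGB color by a scalar, like a Multiply node
    fed by a Color node and the Fresnel output."""
    return tuple(channel * strength for channel in color)


if __name__ == "__main__":
    glow_color = (0.0, 0.5, 1.0)  # hypothetical glow color, not from the tutorial
    view = (0.0, 0.0, 1.0)
    for normal in [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (1.0, 0.0, 0.0)]:
        strength = fresnel_effect(normal, view, power=5.0)
        print(normal, tint(glow_color, strength))
```

A surface pointing straight at the camera gets no glow, while a surface seen edge-on gets the full color, which is why the effect reads as a rim of light around the model.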

There is an interesting feature in the shader graph that lets you open the generated code and see what a node is doing behind the scenes. This could be useful for exploring the shader code behind the vertex color node I was interested in checking out.

Initially, everything is only controllable within the shader graph, with no way to edit anything directly in the Unity Inspector. You can change this, however, by right-clicking on a node and selecting “Convert to Property”. You can still edit it within the shader graph, but it is now found in the Blackboard. This also exposes the property on your material, so different objects can use different colors or effect settings while sharing the same base shader graph.

Finally, they quickly show that the shader graph can accept and apply textures as well, using a simple occlusion example to show this off.

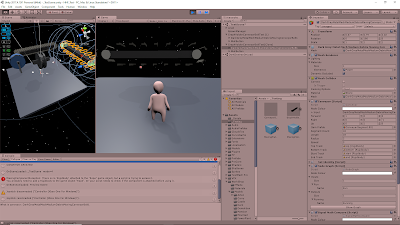

My Final Example Following Tutorial

Model: Free Asset from Polygonal Series by Meshtint

PROBLEMS

The shader graph was a bit finicky for me and had weird issues. Some of the previews within the shader graph did not appear for me. I tried changing up the graph and closing and reopening the shader, but they remained gone. I will have to try making a project with the Lightweight RP template from the initial setup to see if that changes any settings that impact this.

Once I converted the Color node to a property, it was hard to control the final color on the object. Sometimes changing the color in the Blackboard was reflected on the object, and sometimes it wasn’t. Editing the color through the property exposed on the material also only sometimes changed the color. I have not worked out exactly when control passes from the shader graph to the material (or to neither, when the color is not editable at all), but reapplying the material did seem to fix these issues sometimes.

NEXT STEP

More shader graph tutorials would be nice to check out, just to get a basic grasp of how to use it to get some nice effects pretty quickly, although I’d still like to look into the coding of shaders as well. I would also be interested in looking into how to tie the two together and control some of the shader graph’s parameters through script.