June 10, 2020

AI For Beginners

Goal Orientated Action Planning (GOAP)

Parts 1, 2, and 3

Beginner Programming: Unity Game Dev Courses

Unity Learn Course – AI For Beginners

Intro

This course covers goal orientated action planning (GOAP) as another way to set up flexible systems of AI behavior. This post covers the first three tutorials of the course, which introduce the general GOAP concept by defining its parts and explaining the planning that goes into creating a GOAP system.

An Introduction to GOAP

GOAP Introduction

- Goal Orientated Action Planning (GOAP):

- has all the elements of a finite state machine, but uses them differently

- uses graphs for processing

- GOAP's actions and goals are decoupled

- actions are free elements in the system that are mixed/matched to meet goals

- instead of a fixed list of actions needed to meet a goal, GOAP allows one of multiple possible solutions to be chosen

Actions

Components: Precondition, Effect

- Precondition: state that must be met before the action can take place

- Effect: how the action leaves the state of the agent (or world) after the action has occurred

Actions are connected similarly to dominoes, where the effects of actions are matched with the preconditions of other actions to create action chains.
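To make the domino idea concrete, here is a minimal sketch in Python (the course itself works in Unity, so this is only an illustration). The `Action` class, the `can_follow` helper, and the state names such as `at_hospital` are my own assumptions, not names from the course.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Action:
    """A GOAP-style action: usable when its preconditions hold, leaves effects behind."""
    name: str
    preconditions: frozenset = field(default_factory=frozenset)
    effects: frozenset = field(default_factory=frozenset)

    def is_usable(self, state: frozenset) -> bool:
        # Every precondition must already be present in the state.
        return self.preconditions <= state

    def apply(self, state: frozenset) -> frozenset:
        # The resulting state is the old state plus this action's effects.
        return state | self.effects


def can_follow(first: Action, second: Action) -> bool:
    """Domino check: do the effects of `first` supply every precondition of `second`?"""
    return second.preconditions <= first.effects


# Hypothetical actions and state names, loosely based on the hospital example.
register = Action("Register", frozenset({"at_hospital"}), frozenset({"registered"}))
wait = Action("Wait", frozenset({"registered"}), frozenset({"waiting"}))

state = frozenset({"at_hospital"})
print(register.is_usable(state))   # True
print(wait.is_usable(state))       # False
print(can_follow(register, wait))  # True
```

Representing state as a set of facts keeps the matching simple: one action can follow another when the first one's effects cover the second one's preconditions.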

Goal: the end state of the agent

Creating a plan starts from understanding the current state of the agent itself and the world around it. The planning stage of GOAP works backwards from the goal to see if it is achievable from the current states (again, of the agent and the world it understands). Since this approach can produce multiple action chains that lead to the same goal, costs are implemented to help the agent decide which action chain to choose (and in the case of a tie, one can be selected at random).
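As a rough sketch of how costs pick between competing action chains, the snippet below runs a uniform-cost search over states. Note the assumptions: it searches forward from the current state rather than backwards from the goal, it keeps the first cheapest chain it finds instead of breaking ties at random, and the nurse actions and their costs are made up for illustration.

```python
import heapq
from itertools import count


def plan(start, goal, actions):
    """Return (total_cost, [action names]) for the cheapest chain, or None.

    start, goal: sets of state facts.
    actions: list of (name, cost, preconditions, effects) tuples.
    """
    start, goal = frozenset(start), frozenset(goal)
    tie = count()  # tie-breaker so the heap never has to compare states
    frontier = [(0, next(tie), start, [])]
    best = {start: 0}  # cheapest known cost to reach each state
    while frontier:
        cost, _, state, steps = heapq.heappop(frontier)
        if cost > best.get(state, float("inf")):
            continue  # a cheaper route to this state was already found
        if goal <= state:
            return cost, steps
        for name, action_cost, pre, eff in actions:
            if not frozenset(pre) <= state:
                continue  # preconditions not met, action cannot be chained here
            new_state = state | frozenset(eff)
            new_cost = cost + action_cost
            if new_cost < best.get(new_state, float("inf")):
                best[new_state] = new_cost
                heapq.heappush(frontier, (new_cost, next(tie), new_state, steps + [name]))
    return None  # no chain of actions reaches the goal


# Hypothetical nurse actions: two different chains both end in "rested".
actions = [
    ("GoToStaffRoom", 3, set(), {"at_staff_room"}),
    ("RestInStaffRoom", 2, {"at_staff_room"}, {"rested"}),
    ("RestAtDesk", 8, set(), {"rested"}),
]

print(plan(set(), {"rested"}, actions))
# (5, ['GoToStaffRoom', 'RestInStaffRoom'])
```

Here the two-step chain wins because its total cost (5) is lower than the single expensive action (8), which is the kind of decision the costs are there to settle.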

A general GOAP system follows this structure: actions, goals, and the world state data are fed into a planner. The planner chains actions together according to the goals and starting states to see which plans are achievable. The planner uses the A* algorithm (the same algorithm commonly used for pathfinding) to determine the “best plan”. Once a plan is generated, the agent carries it out with a simple state machine. This state machine simply moves the agent to where it needs to be to perform each action, and performs the actions until the goal is achieved. Before each action is performed, it is checked to see if it is still valid. If it is not, the entire plan is abandoned and a new plan is generated.
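The "check each action, abandon the plan, and replan" loop can be sketched as follows. This is only my own illustration under several assumptions: movement is left out entirely, `toy_planner` is a hard-coded stand-in for a real planner, and the `before_step` hook exists only so the example can simulate the world changing under the agent.

```python
def run_agent(state, goal, make_plan, before_step=lambda s: s, max_replans=5):
    """Execute plans step by step, replanning when a step is no longer valid."""
    log = []
    for _ in range(max_replans + 1):
        steps = make_plan(state, goal)
        if steps is None:
            log.append("no plan found")
            break
        for step in steps:
            state = before_step(state)    # the world may change under the agent
            if not step["pre"] <= state:  # validity check before acting
                log.append("replan: " + step["name"] + " is no longer valid")
                break                     # abandon the whole plan
            state = state | step["eff"]
            log.append(step["name"])
        if goal <= state:
            break
    return state, log


# Hypothetical steps for a patient agent.
REGISTER = {"name": "Register", "pre": frozenset(), "eff": frozenset({"registered"})}
WAIT = {"name": "WaitForCubicle", "pre": frozenset({"registered"}),
        "eff": frozenset({"cubicle_free"})}
GO_TO_CUBICLE = {"name": "GoToCubicle", "pre": frozenset({"registered", "cubicle_free"}),
                 "eff": frozenset({"treated"})}


def toy_planner(state, goal):
    # Stand-in for a real planner: only include the steps that are still needed.
    steps = []
    if "registered" not in state:
        steps.append(REGISTER)
    if "cubicle_free" not in state:
        steps.append(WAIT)
    steps.append(GO_TO_CUBICLE)
    return steps


taken = {"done": False}


def cubicle_gets_taken(state):
    # Simulated world event: someone takes the cubicle once, right after registration.
    if "registered" in state and not taken["done"]:
        taken["done"] = True
        return state - {"cubicle_free"}
    return state


final_state, log = run_agent(frozenset({"cubicle_free"}), frozenset({"treated"}),
                             toy_planner, cubicle_gets_taken)
print(log)
# ['Register', 'replan: GoToCubicle is no longer valid', 'WaitForCubicle', 'GoToCubicle']
```

The agent does not try to patch the broken plan. It throws the plan away and asks the planner again from whatever state it is now in.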

One of the biggest benefits of the GOAP approach is that new actions can continually be added to the pool of available actions for the agent. The planner then picks these up automatically as further options when building an action chain or plan to reach a goal. The planner effectively builds the full graph of actions for the designer, so adding behaviors is easier: complete graphs do not need to be programmed by hand, as is more common with full finite state machines.

Setting Up A GOAP Environment

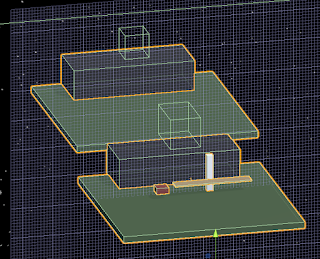

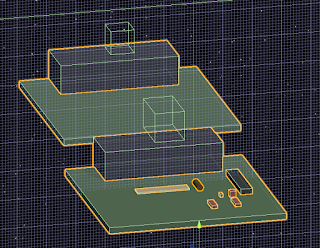

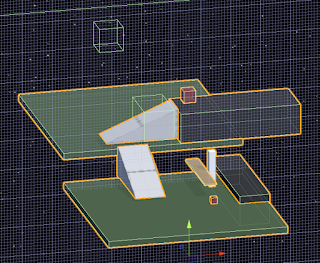

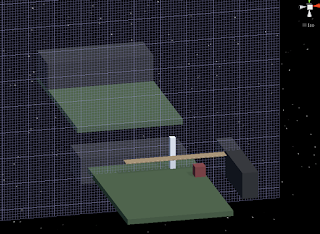

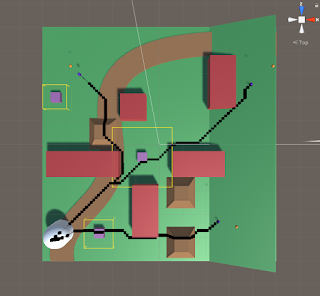

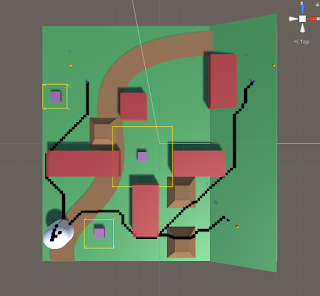

This first major tutorial starts the setup for a basic GOAP project. It is set in a small hospital with patient agents and nurse agents.

Patient Agents

They come into the hospital and get registered at the reception desk. They then move to the waiting room until it is their turn to be seen, at which point they are brought to a cubicle to be checked on before leaving the hospital.

Nurse Agents

They operate the reception desk, receiving incoming patient agents; they collect patients from the waiting room and take them to a cubicle to check on them; and they can also rest in the staffing area if they need a break.

Since a lot of the actions and goals of these two agents are heavily location based, the tutorial implements an underlying waypoint system for the entire setup. Empty GameObjects are placed at all the critical locations to serve as waypoints for guiding the agents. Each waypoint has its own unique tag so the agents can locate it immediately upon instantiation.

Pre-Planning the Agent Actions

The foundation of GOAP is that there are goals, actions, and states. All of these must be taken into consideration when deciding which ones to include and which should be able to lead into others when graphing out plans. An agent has a list of goals it can achieve. It also has a pool of actions it can use to achieve these goals. Some actions may require other actions to be performed first. Some actions may also require other agents or some other outside resource.

Patient Actions:

- Come into hospital

- Register at the front desk

- Go to waiting room

- Get treated when nurse and cubicle are available

- Go home

Nurse Actions:

- Get Patient

- Go to Cubicle

- Rest

The agent must draw information from the world states to help determine what goals are achievable. It will also combine this information with its own state (data it has within itself) to help it determine what is possible. The world state information and the agent state information are passed into the planner, along with all the goal and action options of the agent, so the planner can generate a plan for the agent.
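As a small illustration of combining the two kinds of state, the sketch below merges a world state and an agent state into one planner input and checks which patient actions are currently possible. The state keys (`nurse_free`, `cubicle_free`, and so on) and the helper names are assumptions of mine; the course has not yet shown how it stores this data.

```python
def build_planner_input(world_state, agent_state, goals, actions):
    """Bundle everything the planner needs into one dictionary."""
    # The agent's own data is layered on top of the shared world data.
    combined = {**world_state, **agent_state}
    return {"state": combined, "goals": goals, "actions": actions}


def usable_actions(planner_input):
    """Names of the actions whose preconditions all hold in the combined state."""
    state = planner_input["state"]
    return [a["name"] for a in planner_input["actions"]
            if all(state.get(key) == value for key, value in a["pre"].items())]


# The patient actions from the list above, with hypothetical state keys.
patient_actions = [
    {"name": "Register at the front desk", "pre": {"in_hospital": True}},
    {"name": "Go to waiting room", "pre": {"registered": True}},
    {"name": "Get treated",
     "pre": {"in_waiting_room": True, "nurse_free": True, "cubicle_free": True}},
    {"name": "Go home", "pre": {"treated": True}},
]

agent_state = {"in_hospital": True, "registered": True, "in_waiting_room": True}
world_state = {"nurse_free": True, "cubicle_free": False}

busy = build_planner_input(world_state, agent_state, ["treated"], patient_actions)
print("Get treated" in usable_actions(busy))  # False

# Once the world state changes, the same agent state allows the action.
world_state["cubicle_free"] = True
free = build_planner_input(world_state, agent_state, ["treated"], patient_actions)
print("Get treated" in usable_actions(free))  # True
```

"Get treated" depends on both sides: the patient has to be in the waiting room (agent state), and a nurse and cubicle have to be free (world state).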

Summary

Overall, this felt like a good introduction to the GOAP approach to AI. While I don’t think I could build my own systems with it quite yet, I have a much better understanding of the overall concept and how it can be used to give AIs a flexible set of actions to guide their behavior. The ability to add actions to this pool with relatively little cost is very nice for creating a scalable system with a lot of variability. I could also see this leading to a lot of interesting emergent behavior with large pools of agents, goals, and actions. I look forward to the next parts of the course, where they get more into how to program such a system.