March 25, 2020

Updating A*

Spawn and Area of Influence Objects

Spawning Agents

Goal: The ability to spawn agents that can use the A* grid for pathing. There should be options to spawn at different locations, and all agents should use the grid properly.

This was rather straightforward to implement, but I did run into a completely unrelated issue. I created an AgentSpawnManager class which simply holds a prefab reference for the agents to spawn, a transform for the target to pass on to the agents, and an array of possible spawn points (to allow for several spawn locations). This class creates new gameObjects based on the prefab reference, and then sets their target to the one determined by the spawner. The order here was worth keeping an eye on, since there can sometimes be issues with Awake and Start methods when values are set after instantiation.
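As a rough, engine-agnostic sketch of the spawner described above (written in Python rather than Unity C#, with a hypothetical `Agent` class and a `spawn` method whose names are my own), the key point is that the target is assigned immediately after the agent is created. In Unity, Awake runs during instantiation while Start runs later, before the first frame update, so a target assigned right after instantiation is available in Start but not in Awake.

```python
import random
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Agent:
    """Hypothetical stand-in for the agent prefab."""
    position: Vec3
    target: Optional[Vec3] = None  # filled in by the spawner after creation


class AgentSpawnManager:
    """Holds a shared target and a list of possible spawn points."""

    def __init__(self, target: Vec3, spawn_points: Sequence[Vec3],
                 rng: Optional[random.Random] = None) -> None:
        if not spawn_points:
            raise ValueError("at least one spawn point is required")
        self.target = target
        self.spawn_points = list(spawn_points)
        self.rng = rng or random.Random()

    def spawn(self, index: Optional[int] = None) -> Agent:
        """Create an agent at the chosen (or a random) spawn point."""
        if index is None:
            point = self.rng.choice(self.spawn_points)
        else:
            point = self.spawn_points[index]
        agent = Agent(position=point)  # stands in for instantiating the prefab
        agent.target = self.target     # set right after creation
        return agent
```

Calling `spawn(1)` places an agent at the second spawn point, while `spawn()` picks one at random; either way the agent leaves the spawner with its target already set.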

This was all simple enough, but the agents were spawning without moving at all. It turned out that the spawn location was on a surface that had an obstacle beneath it (the obstacle was below the terrain and entirely hidden by it). The cause was how my raycast detection and node grid were set up.

Editing the Grid Creation Raycast

The node and grid creation for the A* system uses a raycast to detect obstacles, as well as the types of terrain, to inform the nodes of their costs or whether they are usable at all. Since it is very common to use large planes or surfaces as general terrain and place obstacles on them, a full ray check would almost always pick up walkable terrain, even if it hit an obstacle as well.

To get around this, I simply had it check for obstacles first and, if it detected one, mark the node unwalkable and move on. This created an issue in the reverse case, however: if an obstacle extended a bit past the terrain into other nodes below the surface, those nodes were picked up as false obstacles. In this case, it was picking up obstacles that were located entirely below the surface.

I was using a distance raycast in Unity, which just checks for everything over a set distance. I looked into switching this to a system that detects only the first collider the ray hits and simply uses that information. I found that the hit information of the raycast provides this.
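To make the difference between the two checks concrete, here is a small engine-agnostic sketch in Python (not the actual Unity code). The `Hit` record and both function names are hypothetical; each hit stores its distance from the ray origin and which kind of layer it belongs to.

```python
from dataclasses import dataclass
from typing import Sequence

WALKABLE = "walkable"
UNWALKABLE = "unwalkable"


@dataclass(frozen=True)
class Hit:
    """Hypothetical record of one collider found along a downward ray."""
    distance: float  # distance from the ray origin to the collider
    layer: str       # WALKABLE or UNWALKABLE


def walkable_obstacles_first(hits: Sequence[Hit]) -> bool:
    """Old approach: any obstacle anywhere along the ray blocks the node."""
    return bool(hits) and not any(h.layer == UNWALKABLE for h in hits)


def walkable_first_hit(hits: Sequence[Hit]) -> bool:
    """New approach: only the closest collider decides the node's state."""
    if not hits:
        return False
    closest = min(hits, key=lambda h: h.distance)
    return closest.layer == WALKABLE


# Terrain at distance 10 with an obstacle buried beneath it at distance 12.
buried_obstacle = [Hit(10.0, WALKABLE), Hit(12.0, UNWALKABLE)]
# Obstacle at distance 8 sitting on top of terrain at distance 10.
obstacle_on_top = [Hit(8.0, UNWALKABLE), Hit(10.0, WALKABLE)]
```

With the buried obstacle, the obstacles-first check wrongly marks the node unwalkable while the first-hit check keeps it walkable. With the obstacle on top of the terrain, both checks agree that the node is blocked.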

Unfortunately, I am using a layer setup for walkable and unwalkable (obstacle) terrain, so I needed to incorporate that into my hit check. Checking layers is awkward and hard to read in Unity scripting, and I am currently just using the hardcoded integer value of the unwalkable layer when doing my check for obstacles. This does at least suffice to let the system work properly for now.
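One way to make the layer check more readable is to give the layer number a name and keep the bit arithmetic in small helpers. The sketch below is plain Python illustrating the idea only: the layer index 9 is a made-up value, and the helper names are hypothetical. In Unity itself, `LayerMask.NameToLayer` can resolve a layer name to its index, which avoids hardcoding the number.

```python
# Hypothetical layer index; the real value depends on the project's layer settings.
UNWALKABLE_LAYER = 9


def layer_to_mask(layer: int) -> int:
    """Convert a layer index (0-31) into a single-bit mask."""
    if not 0 <= layer < 32:
        raise ValueError("layer index must be between 0 and 31")
    return 1 << layer


def mask_contains(mask: int, layer: int) -> bool:
    """Return True if the given layer's bit is set in the mask."""
    return (mask & layer_to_mask(layer)) != 0


UNWALKABLE_MASK = layer_to_mask(UNWALKABLE_LAYER)


def is_obstacle(hit_layer: int) -> bool:
    """Check a hit collider's layer index against the unwalkable mask."""
    return mask_contains(UNWALKABLE_MASK, hit_layer)
```

The comparison against a bare integer then becomes `is_obstacle(hit_layer)`, and the layer number lives in one named constant.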

Influence Area with Objects

I wanted to be able to create objects which could influence the overall cost of nodes around them in an area significantly larger than the objects themselves. The idea is that a small but visible or detectable object could change the appeal of the nodes around it, either drawing agents toward it or pushing them away.

For a base test, I created a simple class called Influence to place on these objects. The first value it needed was an influence int to determine how much to alter the cost of the nodes within its reach. Then, to determine the influence range, I gave it one int each for the x and z directions, which define the dimensions of a rectangle of influence in units of nodes. I also added some get-only properties that derive values from x and z to help center these influence areas in the future.

I then added an Influence array to the AGrid class, which contains all the logic for initializing the grid of nodes and setting their values at startup. After setting up the grid, it goes through this array of Influence objects and uses their transform positions to determine which node each one is centered on. It then finds all the nodes around that center according to the x and z dimensions given to that Influence object, and modifies their cost values using its influence value. Everything worked pretty nicely for this.
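The pass over the influence objects can be sketched roughly as follows, in engine-agnostic Python rather than the actual AGrid code. Several details are assumptions on my part: the grid is a plain 2D list of costs, the influence value is added to the existing cost, the rectangle is clamped at the grid edges, and all function and field names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Influence:
    """Hypothetical stand-in for the Influence component."""
    world_x: float
    world_z: float
    value: int    # how much to change the cost of each affected node
    x_nodes: int  # rectangle width in nodes
    z_nodes: int  # rectangle depth in nodes


def world_to_node(world_x: float, world_z: float, origin_x: float,
                  origin_z: float, node_diameter: float,
                  size_x: int, size_z: int) -> Tuple[int, int]:
    """Map a world position to grid indices, clamped to the grid bounds."""
    ix = math.floor((world_x - origin_x) / node_diameter)
    iz = math.floor((world_z - origin_z) / node_diameter)
    return min(max(ix, 0), size_x - 1), min(max(iz, 0), size_z - 1)


def apply_influences(costs: List[List[int]], influences: Sequence[Influence],
                     origin_x: float, origin_z: float,
                     node_diameter: float) -> None:
    """Add each influence value to the nodes inside its rectangle."""
    size_x, size_z = len(costs), len(costs[0])
    for inf in influences:
        cx, cz = world_to_node(inf.world_x, inf.world_z, origin_x, origin_z,
                               node_diameter, size_x, size_z)
        # Start half the rectangle before the center node on each axis.
        start_x = cx - inf.x_nodes // 2
        start_z = cz - inf.z_nodes // 2
        # Clamp the loop ranges so the rectangle never leaves the grid.
        for ix in range(max(start_x, 0), min(start_x + inf.x_nodes, size_x)):
            for iz in range(max(start_z, 0), min(start_z + inf.z_nodes, size_z)):
                costs[ix][iz] += inf.value
```

For example, on a 5 x 5 grid of one-unit nodes starting at the origin, an influence at world position (2.5, 2.5) with a 3 x 1 rectangle affects the three nodes at x indices 1 to 3 in row z = 2.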

As a final touch just to help with visualization, I added a DrawGizmos method that draws yellow wire cubes around the influence objects to match their area of influence. Since the dimensions are in node units, but drawing a wire cube requires true Unity units, I simply multiplied the x and z node dimensions of the influence by the nodeDiameter (which is the real-world size of each node) to convert them to units that made sense.
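The unit conversion itself is a single multiplication per axis. A minimal Python sketch, with a hypothetical function name and an arbitrary default height for the cube (the height is an assumption, since only x and z are described above):

```python
from typing import Tuple


def influence_gizmo_size(x_nodes: int, z_nodes: int, node_diameter: float,
                         height: float = 1.0) -> Tuple[float, float, float]:
    """Convert a rectangle in node units to a wire cube size in world units."""
    return (x_nodes * node_diameter, height, z_nodes * node_diameter)
```

With a node diameter of 0.5, an influence area of 4 x 6 nodes becomes a cube measuring 2.0 by 3.0 world units on the x and z axes.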

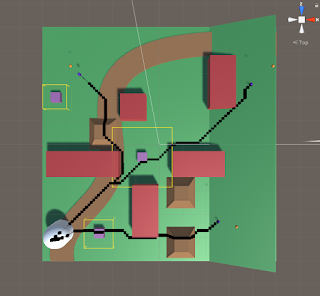

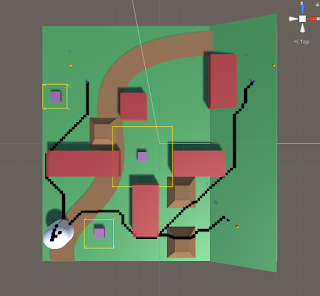

I have two sample images to show the influence objects in effect below. The small purple squares are the influence objects, and the yellow wire frame cubes around them show their estimated area of influence. The first image shows the paths of the agents when the influence of all squares is set to 0 (no influence), and the second shows the paths when the influence is set to 200 (makes nodes around them much more costly and less appealing to travel over).

Paths with Influence Set to 0

Paths with Influence Set to 200

Summary

The raycast and layer system for detecting the terrain and initializing the grid could use some work to perform more cleanly and safely, especially for future updates. The spawning seems to have no issues at all, so that should be good to work with and edit moving forward. The basic implementation of the influence objects has been promising so far. I will look into turning it into a higher-level parent class or an interface moving forward, as this will be a large part of the project and there may be many objects that use this same core logic but want special twists on it (such as different area of influence shapes, or various calculations for how influence should be applied).