August 9, 2021

Shader Graph

Unity

Title:

ART 200: Creating an energy Shader in Shader Graph in Unity

By:

Casey Farina

Description:

Tutorial on creating an energy effect on a surface with Unity’s Shader Graph.

Introduction

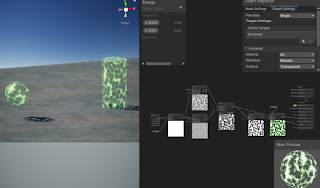

This tutorial covers creating a shader in Unity’s Shader Graph that coats the surface of an object with a glowing, plasma-like effect. Later on, it shows how to make the less pronounced parts of the effect completely transparent. That looks good on its own, and it also lets the shader be layered over other effects or surfaces, with the gaps showing whatever is underneath.

Quick Notes

HDR Color and Emission

It starts by feeding an HDR color with significant intensity into Emission to create a glowing, radiant effect.
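
To make the role of the intensity value a bit more concrete, here is a minimal Python sketch of the arithmetic. It assumes the intensity behaves like exposure stops, so each step doubles the brightness; the function name `hdr_color` and the sample values are hypothetical and not taken from the tutorial.

```python
def hdr_color(base_rgb, intensity_stops):
    """Scale an RGB color by 2**intensity_stops (assumed exposure-style intensity)."""
    scale = 2.0 ** intensity_stops
    return tuple(channel * scale for channel in base_rgb)


# Hypothetical example: a cyan-blue base color pushed up by 3 stops.
emission = hdr_color((0.0, 0.5, 1.0), 3.0)
print(emission)  # (0.0, 4.0, 8.0)
```

Channels above 1.0 are what make the color "HDR". Sent into Emission, those over-bright values are what let the surface read as glowing, typically with help from a bloom post-process.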

Voronoi Noise in Layers

Voronoi noise is used to create a moving globules effect within the energy. A Contrast node is applied to it to make the dots more distinct.
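
For anyone curious what these two nodes are doing numerically, the following is a rough Python sketch, not Unity's actual implementation. The hash in `_hash01` is a hypothetical stand-in for the node's internal randomness, and the `contrast` formula follows my understanding of the Contrast node (scaling values away from a gamma-adjusted midpoint), so treat both as assumptions.

```python
import math

TAU = 2.0 * math.pi


def _hash01(cell_x, cell_y, seed):
    """Hypothetical hash: maps an integer cell to a pseudo-random value in [0, 1)."""
    h = math.sin(cell_x * 127.1 + cell_y * 311.7 + seed) * 43758.5453
    return h - math.floor(h)


def _feature_point(cell_x, cell_y, angle_offset):
    """One point per cell; changing angle_offset over time makes it drift."""
    a1 = _hash01(cell_x, cell_y, 0.0) * TAU + angle_offset
    a2 = _hash01(cell_x, cell_y, 17.0) * TAU + angle_offset
    return 0.5 + 0.5 * math.sin(a1), 0.5 + 0.5 * math.cos(a2)


def voronoi(u, v, angle_offset, cell_density):
    """Distance from (u, v) to the nearest feature point in the 3x3 neighbor cells."""
    x, y = u * cell_density, v * cell_density
    gx, gy = math.floor(x), math.floor(y)
    fx, fy = x - gx, y - gy
    best = 8.0
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            px, py = _feature_point(gx + dx, gy + dy, angle_offset)
            best = min(best, math.hypot(dx + px - fx, dy + py - fy))
    return best


def contrast(value, amount):
    """Push values away from a midpoint (assumed to be 0.5 ** 2.2)."""
    midpoint = 0.5 ** 2.2
    return (value - midpoint) * amount + midpoint


# Hypothetical usage: drive angle_offset with time to animate the cells.
time_seconds, speed = 2.0, 0.75
raw = voronoi(0.3, 0.7, time_seconds * speed, cell_density=5.0)
print(raw, contrast(raw, 3.0))
```

With an amount above 1, values above the midpoint get brighter and values below it get darker, which is what sharpens the soft Voronoi cells into more distinct dots.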

They then created two Voronoi noises with different cell densities and combined them with a Blend node, giving a mix of tiny particles and larger shapes moving throughout the material.
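
The layering step can be sketched in Python as well. The blend mode is not recorded in these notes, so the Multiply-style blend below is purely an assumption for illustration, and the two sample values stand in for the outputs of the fine and coarse noise layers.

```python
def lerp(a, b, t):
    """Linear interpolation from a to b by t."""
    return a + (b - a) * t


def blend_multiply(base, layer, opacity):
    """Multiply-style blend (assumed mode), faded in by opacity."""
    return lerp(base, base * layer, opacity)


# Hypothetical samples at one pixel: a high cell density layer (tiny particles)
# and a low cell density layer (larger shapes).
fine_layer = 0.8
coarse_layer = 0.5

combined = blend_multiply(fine_layer, coarse_layer, opacity=1.0)
print(round(combined, 3))  # 0.4
```

At an opacity of 0 the result is just the base layer, and at 1 the second layer fully modulates it, so the opacity input is a handy dial for how strongly the larger shapes show through the small particles.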

Transparency and Alpha Clip Threshold

Set the Surface to Transparent in the Graph Inspector, feed the Blend result into the Alpha input that then appears on the Fragment node, and set a suitable Alpha Clip Threshold (0.1 is usually a good start). The result is an effect where parts of the energy shader are completely transparent and see-through.
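
The clipping behavior itself is simple enough to sketch in a few lines of Python. This assumes a fragment is discarded when its alpha falls below the threshold and kept otherwise; the function name `fragment_alpha` and the use of `None` to represent a discarded fragment are hypothetical choices for this illustration.

```python
def fragment_alpha(alpha, clip_threshold=0.1):
    """Return None for a discarded fragment, otherwise the alpha used for blending."""
    if alpha < clip_threshold:
        return None  # fragment is dropped, so whatever is behind shows through
    return alpha


# Hypothetical alpha values coming out of the blended noise.
for sample in (0.05, 0.1, 0.6):
    print(sample, fragment_alpha(sample))
```

Raising the threshold cuts away more of the faint areas and widens the gaps, while lowering it keeps more of the dim noise visible.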

Summary

This tutorial helped me make a simple yet effective energy shader that works decently well on multiple surfaces. The extra segment on adding transparency really took it up a level for me, turning it into an interesting effect that could have a lot of uses. Transparency expands the effect by letting it be applied to a larger mesh surrounding an object’s actual mesh, which could provide a cool aura around the object, and it also makes layering with other effects easier.

They also included a small segment on using the Gradient node for some strange effects. It didn’t particularly appeal to me, so I didn’t try that portion out myself. It could add some more variety to the tool, but it just wasn’t something I wanted at the moment.

My result can be seen in action below with a few variations in speed of the effect and color!

via Blogger http://stevelilleyschool.blogspot.com/2021/08/unity-shader-graph-energy-shader-by.html