June 28, 2021

Borsuk-Ulam Theorem

3D Math

Title:

A surprising topological proof – Why you can always cut three objects in half with a single plane

By:

Zach Star

Description:

Proving that any set of n objects in n-dimensional space can be cut in half by a single (n-1)-dimensional object (e.g. three 3D objects can all be cut in half by one 2D plane).

Overview

This was just a fun and interesting mathematical proof I came across. With the Borsuk-Ulam Theorem at its core, it shows that any three 3D objects can all be cut in half (by volume) by a single 2D plane. It then generalizes this to any n objects in n-dimensional space, which can all be halved at once by a single (n-1)-dimensional hyperplane. This result is commonly known as the ham sandwich theorem.

Borsuk-Ulam Theorem

You can read more about it on the corresponding Wikipedia page:

Wikipedia – Borsuk-Ulam Theorem

This states: “every continuous function from an n-sphere into Euclidean n-space maps some pair of antipodal points to the same point”. Two simple examples show how it is used. In the 2D case (a circle), there must be a pair of antipodal points on the equator with the same temperature. In the 3D case (the surface of a sphere), there must be a pair of antipodal points on the Earth's surface that have the same temperature and the same pressure. Note that the matching points are specifically antipodal, meaning directly opposite each other, which is a much stronger claim than just "some two points match". Another key note is that this is only true if all of the quantities vary continuously in space.
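For my own reference, here is the same statement written in symbols. The names T and P for temperature and pressure are labels I picked, not notation from the video.

```latex
% Borsuk-Ulam: S^n is the n-sphere, and -x is the point antipodal to x.
\[
  f : S^n \to \mathbb{R}^n \ \text{continuous}
  \quad\Longrightarrow\quad
  \exists\, x \in S^n \ \text{such that}\ f(x) = f(-x).
\]
% n = 1 (equator, temperature T):   T(x) = T(-x) for some x on the circle.
% n = 2 (Earth's surface, T and P): (T(x), P(x)) = (T(-x), P(-x)) for some x.
```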

Expanding to Proof on Dividing Objects

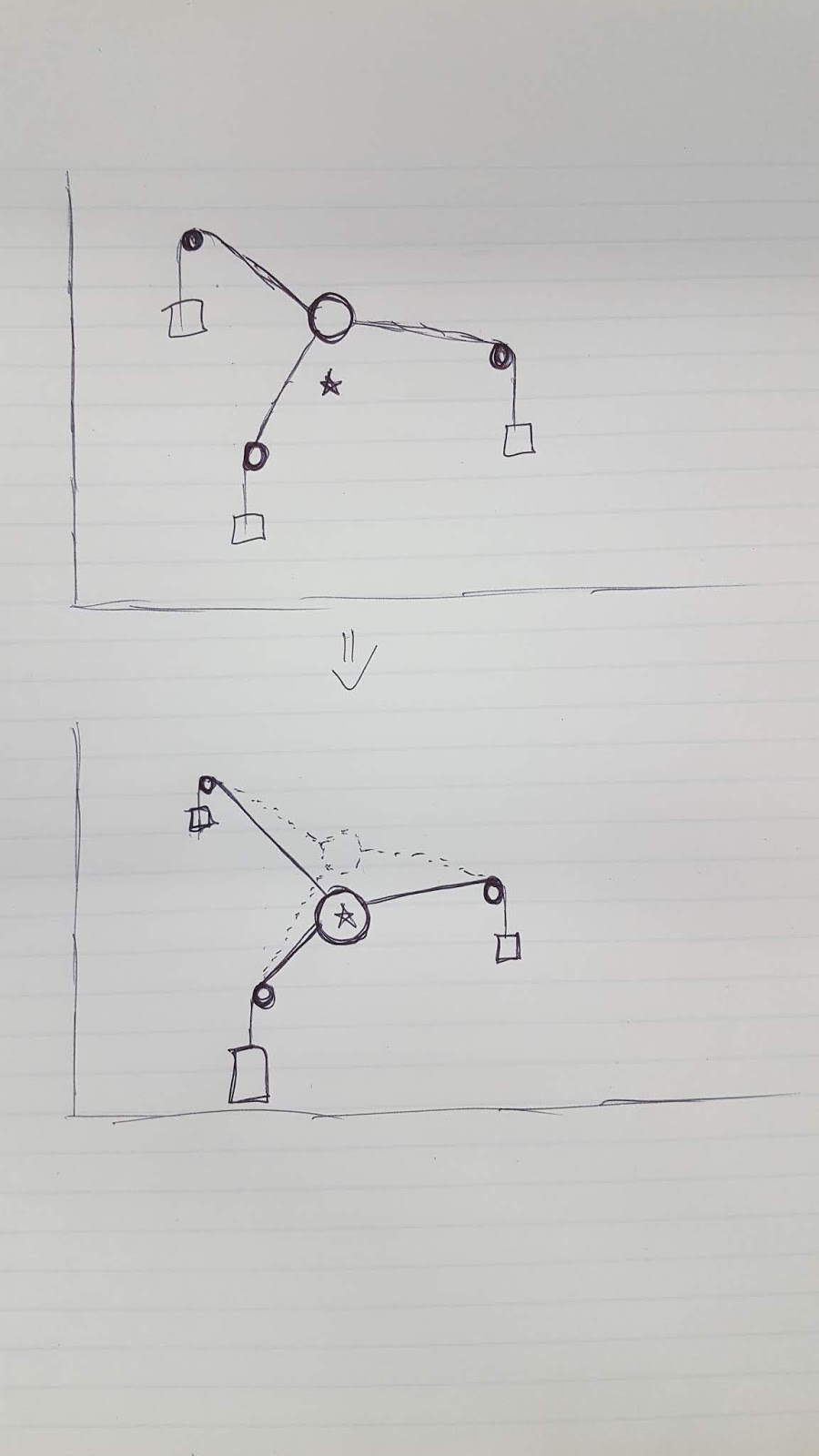

To apply this to the cutting problem, the video starts with the 2D example (two shapes cut by one line), since it is easier to visualize and uses the same foundational ideas as the general n-dimensional case. It begins with a unit circle and shows that the tangent lines at the points around the circle account for every possible slope in the plane. For each of those slopes, some line with that slope cuts a given shape in half by area: slide a line of that slope across the shape and the area on one side grows continuously from none of it to all of it, so it must pass through exactly half along the way.
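To convince myself of the "one halving line per direction" step, here is a minimal Python sketch. It assumes each shape is approximated by a list of sample points, so "half the area" becomes "half the points", and the function name `bisecting_offset` is my own, not something from the video. The point on the unit circle at angle theta is used as the normal of the line, which makes the line parallel to the tangent at that point.

```python
import math
import statistics


def bisecting_offset(points, theta):
    """Offset c of the line {p : p . n = c} that halves the point set.

    n = (cos(theta), sin(theta)) is the point on the unit circle at angle
    theta, used as the line's normal. Projecting every sample onto n and
    taking the median leaves half of the samples on each side of the line.
    """
    nx, ny = math.cos(theta), math.sin(theta)
    return statistics.median(x * nx + y * ny for x, y in points)


# A 3x3 grid of samples centered at (2, 1), standing in for a square shape.
square = [(2 + dx, 1 + dy) for dx in (-1, 0, 1) for dy in (-1, 0, 1)]

for theta in (0.0, math.pi / 4, math.pi / 2):
    print(theta, bisecting_offset(square, theta))
```

Because this sample set is symmetric about its center, every halving line found this way passes through (2, 1), which matches the intuition for a square.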

The key to using the Borsuk-Ulam Theorem in this case is assigning a value to each point on the circle. For a given point, take the two parallel lines in that point's tangent direction that cut shape #1 and shape #2 in half, and use the distance between those two lines as the value. Because the goal is to compare antipodal points, the distance is signed, with the sign based on the normal at that point on the unit circle: if the normal points towards shape #1's line, the distance is positive, and if it points towards shape #2's line, it is negative. Antipodal points produce the same pair of lines but have opposite normals, so their values are always negatives of each other. Borsuk-Ulam guarantees that some pair of antipodal points has equal values, and the only number equal to its own negative is 0. So the value 0 must occur somewhere, and a distance of 0 between the two lines means they are the same line, cutting both shapes in half.
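The signed-distance argument can also be tried numerically. This is only a sketch under my own assumptions: shapes are approximated by sample points, the halving line for a direction is found with a median of projections, and all function names are hypothetical. Since the signed gap flips sign between angle 0 and angle pi (antipodal points), a simple bisection search on the angle finds where it crosses 0.

```python
import math
import statistics


def bisecting_offset(points, theta):
    # Offset along the normal n = (cos(theta), sin(theta)) of the line
    # that leaves half of the sample points on each side.
    nx, ny = math.cos(theta), math.sin(theta)
    return statistics.median(x * nx + y * ny for x, y in points)


def signed_gap(shape1, shape2, theta):
    # Signed distance between the two parallel halving lines, measured
    # along the normal. Replacing theta by theta + pi negates this value.
    return bisecting_offset(shape1, theta) - bisecting_offset(shape2, theta)


def common_bisector_angle(shape1, shape2, tol=1e-12):
    # The gap at pi is the negative of the gap at 0, so a sign change
    # (and therefore a zero) lies somewhere in [0, pi].
    lo, hi = 0.0, math.pi
    f_lo = signed_gap(shape1, shape2, lo)
    if f_lo == 0.0:
        return lo
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = signed_gap(shape1, shape2, mid)
        if f_mid == 0.0:
            return mid
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid  # zero is in the upper half
        else:
            hi = mid               # zero is in the lower half
    return 0.5 * (lo + hi)


# Two 3x3 sample grids, centered at (0, 0) and (3, 3).
grid = [(dx, dy) for dx in (-1, 0, 1) for dy in (-1, 0, 1)]
shape1 = grid
shape2 = [(x + 3, y + 3) for x, y in grid]

theta = common_bisector_angle(shape1, shape2)
print(theta, signed_gap(shape1, shape2, theta))
```

For these two grids the search settles on a normal angle of about 3π/4, which corresponds to the line y = x through both centers, as you would expect for two symmetric shapes.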

Summary

Honestly, I was mostly just interested in the concept that there's always at least one plane that can cut up to three 3D objects in half, and I was not fully connecting the dots on every step of the actual proof. Just learning about the Borsuk-Ulam Theorem was very interesting in itself, and seeing some of the unexpected results it can be used to prove was pretty eye-opening. I wanted to check it out because I thought it would be fun to explore in a small Unity project: a puzzle-like game centered around this idea that also provides some background information on these concepts and theorems.

via Blogger http://stevelilleyschool.blogspot.com/2021/06/3d-math-always-single-plane-to-cut.html