April 15, 2021

Tutorial

Unreal

Title:

Unreal Engine 4.26 Beginner’s Tutorial: Make a Platformer Game

By:

DevAddict

YouTube – Tutorial

Description:

A large tutorial focusing on fleshing out the functionality of a given project and assets in Unreal.

Summary

This was one of the tutorials from my “Intro to Unreal: Basics Tutorial Compilation” post. It ended up being a great introductory tutorial: the first half covers the basics of moving around the Unreal editor and basic level building, and the second half delves into blueprints and how to read them, modify them, and create your own. The focus is on building a 3D platformer with a provided Unreal learning project, and it covers most of the basics for creating that type of game.

Notes and Lessons Learned from the Tutorial

Keyboard Shortcuts

End (while moving an actor): drops that actor directly to the ground plane below it

Ctrl + W (in Blueprint editor): duplicates the currently selected nodes/node system

Editing a Static Mesh

Opening Mesh in Mesh Editor:

With a static mesh selected in your level, you can double click the Static Mesh component in the Details tab to open that mesh in the Mesh Editor.

Collision:

The Collision dropdown (Show Collision button) near the top of the Mesh Editor allows for visualizing the collider(s). If nothing appears, your mesh is probably missing colliders.

Auto-Collider Generator and K-DOP

The Collision tab further up can be used to create and apply simple colliders quickly. The K-DOP collider creator is a “type of bounding volume that basically takes K axis-aligned planes and pushes them as close to the mesh as it can, where K is the number of planes.” So 6DOP presses 6 planes against the mesh, and 18DOP presses 18 planes against the mesh, for example.
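To make the "pushing planes against the mesh" idea concrete, here is a minimal sketch in plain Python (not Unreal code). It assumes the usual textbook construction, where the planes come in parallel pairs along fixed directions, so a 6DOP uses 3 directions and an 18DOP uses 9. The names `kdop`, `DIRECTIONS`, and `vertices` are made up for illustration, and Unreal's own generator may differ in its details.

```python
import math

# Candidate directions (before normalizing). Each direction contributes two
# parallel planes, one pressed against each side of the mesh.
AXES = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]
EDGES = [(1, 1, 0), (1, -1, 0), (1, 0, 1), (1, 0, -1), (0, 1, 1), (0, 1, -1)]
DIRECTIONS = {6: AXES, 18: AXES + EDGES}


def kdop(points, k):
    """Return one (direction, min_offset, max_offset) slab per direction."""
    slabs = []
    for raw in DIRECTIONS[k]:
        length = math.sqrt(sum(c * c for c in raw))
        direction = tuple(c / length for c in raw)
        # Project every vertex onto the direction; the extremes are where
        # the two planes for this direction come to rest.
        offsets = [sum(p * d for p, d in zip(point, direction)) for point in points]
        slabs.append((direction, min(offsets), max(offsets)))
    return slabs


# Hypothetical mesh vertices, just for the example.
vertices = [(0, 0, 0), (2, 0, 0), (0, 1, 0), (0, 0, 3)]
box = kdop(vertices, 6)  # the 6DOP case is simply an axis-aligned bounding box
```

With only the three axis directions, the result is an ordinary bounding box. Adding the diagonal directions for 18DOP trims the corners so the volume hugs the mesh more closely.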

The Auto Convex Collision option opens the Convex Decomposition tab, which is used to create more complex colliders that more accurately represent the surface of the Static Mesh. The Collision properties of the mesh are found in the Details tab within the Mesh Editor, which holds many of the important collision options.

Collision Presets:

This is similar to the concept of using layers for collision in Unity, where you can determine what this collider actually interacts with. A common choice is “BlockAll” for environmental obstacles as this will cause it to collide with everything.
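As a rough mental model of how presets decide what interacts, here is a simplified Python sketch. It assumes each preset applies one response to everything (real presets set a response per object type), and that two objects only block each other when both respond with Block, which is how I understand Unreal's filtering to work. The `PRESETS` table and `interaction` function are hypothetical names.

```python
IGNORE, OVERLAP, BLOCK = 0, 1, 2

# Hypothetical, simplified presets: one response applied to every other object.
PRESETS = {"NoCollision": IGNORE, "OverlapAll": OVERLAP, "BlockAll": BLOCK}


def interaction(preset_a, preset_b):
    """The less restrictive of the two responses wins."""
    return min(PRESETS[preset_a], PRESETS[preset_b])


wall_vs_wall = interaction("BlockAll", "BlockAll")        # both block
wall_vs_pickup = interaction("BlockAll", "OverlapAll")    # downgraded to overlap
wall_vs_ghost = interaction("BlockAll", "NoCollision")    # ignored entirely
```

Under this model, “BlockAll” on an obstacle is not enough on its own: the other object also has to block it, or the pair only overlaps or ignores each other.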

Editor Settings

Change Editor Camera Position When Exiting Play Mode:

By default, when I exited play mode after a quick test, the editor camera stayed exactly where the player camera was when play mode ended (so the camera appears not to change at all when leaving play mode). I preferred that the editor camera return to where I had positioned it before entering play mode, and found a setting for this in Editor Preferences.

Editor Preferences -> Level Editor: Viewports -> Look and Feel -> Uncheck “Use Camera Location from Play-In-Viewport”

Pain Causing Volumes

Pain Causing Volumes can be used to create a death plane in your game for when the player falls into a pit or onto some other dangerous floor. They can be found in the “Place Actors” tab.

Setting Up a Physics Object

There is a Physics section in the Details tab of many actors and meshes. Turn on “Simulate Physics” here. Then under the Collision section, the Collision Preset “PhysicsActor” can be used, along with the Object Type “PhysicsBody”.

Game Mode

This is a section found in the World Settings. Most projects will use a GameMode Override (although the default for a new project is usually None).

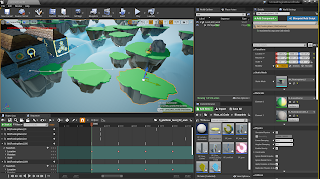

Level Sequencer

The Level Sequencer is its own window to help control the timeline of events and animations of objects throughout the level. In this tutorial, it was used to help control the infinite periodic movement of moving platforms.
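The Sequencer handles this with keyframes and looping rather than code, but the motion it produces for a back-and-forth platform can be sketched with a little math. This plain Python sketch assumes linear movement between two points and a made-up `period` for one full round trip. `platform_position` is a hypothetical helper, not part of Unreal.

```python
def platform_position(start, end, period, t):
    """Position at time t for a platform going start -> end -> start every `period` seconds."""
    phase = (t % period) / period         # 0..1 across one loop
    alpha = 1.0 - abs(2.0 * phase - 1.0)  # triangle wave: 0 -> 1 -> 0
    return tuple(s + (e - s) * alpha for s, e in zip(start, end))


# A platform that takes 4 seconds to go out 10 units along X and come back.
quarter = platform_position((0, 0, 0), (10, 0, 0), 4.0, 1.0)   # halfway out
half = platform_position((0, 0, 0), (10, 0, 0), 4.0, 2.0)      # at the far end
looped = platform_position((0, 0, 0), (10, 0, 0), 4.0, 5.0)    # same as t = 1.0
```

The modulo on `t` is what makes the movement repeat forever, which is the role looping plays for the sequence in the level.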

Adding Actors to Level Sequencer:

Within the Sequencer window, go to “+ Track” and hover “Actor to Sequencer”. From here, a list of all possible actors comes up which can be added. You can also select an actor before this, and it will appear at the top of the list to make it easy to quickly add the currently selected actor to the Sequencer.

Widgets

Widgets are the elements that make up the game’s HUD or on-screen UI.

Editing Text:

By default, text objects are not variables and are expected to be relatively static. If you want text that updates, like a collection counter of some kind, you need to check “Is Variable” for that specific Text in the Details window of the Widget Designer. This appears to be similar to making a public text variable that is accessible in your blueprints.

Input Settings

You can get to the Input Settings through:

Edit -> Project Settings -> Engine -> Input

Again, this is similar to the Input Settings in Unity where specific names or labels can be given to different actions which can then be tied to specific key bindings or joystick inputs. These names/labels can then be used in blueprints with nodes such as the InputAction node.

This approach is good to keep your inputs more organized. Your blueprints will be clearer since the name of the action will be there instead of arbitrary inputs like key “K”, and this makes it easier to modify inputs later if you want to change keybindings.
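The benefit of named actions can be sketched in plain Python. This is only a toy model of the idea, not Unreal's input system: the `InputSettings` class, its methods, and the key names are all hypothetical.

```python
class InputSettings:
    """Maps named actions to keys, and named actions to handlers."""

    def __init__(self):
        self.bindings = {}  # action name -> set of key names
        self.handlers = {}  # action name -> list of callbacks

    def bind(self, action, key):
        self.bindings.setdefault(action, set()).add(key)

    def rebind(self, action, old_key, new_key):
        self.bindings[action].discard(old_key)
        self.bindings[action].add(new_key)

    def on_action(self, action, handler):
        self.handlers.setdefault(action, []).append(handler)

    def press(self, key):
        """Run the handlers of every action bound to this key."""
        for action, keys in self.bindings.items():
            if key in keys:
                for handler in self.handlers.get(action, []):
                    handler()


events = []
settings = InputSettings()
settings.bind("Jump", "SpaceBar")
settings.on_action("Jump", lambda: events.append("Jump"))
settings.press("SpaceBar")                # gameplay logic only knows "Jump"
settings.rebind("Jump", "SpaceBar", "K")  # change the key in one place
settings.press("SpaceBar")                # no longer bound, nothing happens
settings.press("K")
```

The handler never mentions a key, so rebinding touches only the mapping and none of the gameplay logic.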

Blueprints Notes from Tutorial

This section covers many of the blueprint-related notes that are given throughout the tutorial.

Within a Blueprint, in the Event Graph, some events can be added directly to components by selecting options presented in the Details tab for the currently selected component. For example, this tutorial starts with the checkpoint blueprint, whose sphere collider uses one of these events named “On Component Begin Overlap”. Selecting this immediately creates an event node of that type in your Event Graph, referencing the currently selected component.

A lot of important information for your blueprint can be found in the “My Blueprint” tab. This displays information such as the various functions and variables throughout the current blueprint.

Components can be dragged into the Event Graph from the Components tab to quickly use them as references in your blueprint.

Ctrl + W (in Blueprint editor): duplicates the currently selected nodes/node system

Finding Variable References:

Select a variable under “My Blueprint”, right-click it, and select “Find References”. This generates a list at the bottom of the editor showing everywhere that variable is used. Double-clicking an entry takes you directly to that specific reference.

Splitting Pins:

Some pins can be split into their more basic components in blueprints when necessary. This is done by right-clicking DIRECTLY on a pin and selecting “Split Struct Pin”. The example seen in this tutorial split a Transform pin so that it became 3 separate pins: location, rotation, and scale. These can then be connected to other pins separately.

IsValid Node:

Determines whether its input is valid, and continues execution along a different path based on the result. This can be used as a null reference check, to make sure something is only done if the input actually exists.

PrintString Node:

Similar to the Debug.Log method in Unity, this outputs string data so you can check and debug your blueprint graphs.

DestroyActor Node:

Similar to calling Destroy on a GameObject in Unity, this is a quick and dirty way to remove something from existence in Unreal.

Auto Finding Proper Data from Variables Between Pins:

Sometimes you can drag a pin of one variable/class type to a pin of another type, and the editor will add an in-between node that gets a variable of the appropriate type from within that class for you. For example, when connecting the actor pin of the OnComponentBeginOverlap node to a PrintString node’s string pin, it adds a GetDisplayName node in between so that the name of the object of interest is passed into the PrintString node as string data.

Casting Actors for More Detailed Variable Modification:

Many nodes work with Actors in blueprints, but often you need to adjust variables within deeper classes. When you need to start with a general node, but access a class inheriting from Actor, you can use a “Cast To …” node to get access to the proper tools. After casting to your designated class, it is then possible to modify the variables and values within that class.

The example from the tutorial is that BP_jumpBoost wants access to the player character to modify their jump value. Since this happens when they collect the powerup, it starts with an OnComponentBeginOverlap node. This returns the other actor that overlapped it, but knowing only that it is an Actor is not enough to modify the player character’s jump value. A CastToEpicCharacter node therefore receives the OtherActor pin data from the OnComponentBeginOverlap node and converts it to the more specific class, where the jump velocity can then be set as wanted.

The tutorial then suggests that interfaces are another way to achieve a similar goal in these situations. The tutorial author even prefers interfaces, but both appear to be valid approaches.
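The casting approach from the jump boost example maps closely onto a type check in code. Here is a minimal plain Python sketch of that flow. The `Actor` and `EpicCharacter` classes stand in for the Unreal ones, and `cast_to`, `jump_z_velocity`, and the velocity values are hypothetical names and numbers chosen for illustration.

```python
class Actor:
    def __init__(self, name):
        self.name = name


class EpicCharacter(Actor):
    def __init__(self, name, jump_z_velocity=600.0):
        super().__init__(name)
        self.jump_z_velocity = jump_z_velocity


def cast_to(actor, cls):
    """Return the actor if it is of the requested class, otherwise None (a failed cast)."""
    return actor if isinstance(actor, cls) else None


def on_jump_boost_overlap(other_actor, boosted_velocity=1200.0):
    """What the powerup does when something overlaps it."""
    character = cast_to(other_actor, EpicCharacter)
    if character is None:
        return False  # not the player character, so do nothing
    character.jump_z_velocity = boosted_velocity
    return True


player = EpicCharacter("Player")
crate = Actor("Crate")
boosted_player = on_jump_boost_overlap(player)
boosted_crate = on_jump_boost_overlap(crate)
```

The overlap only hands over a generic actor. The cast is what makes the jump value reachable, and it also filters out anything that is not the player.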

Adding Variables:

1) They can be added under the My Blueprint window within the blueprint

2) Right-click a variable pin and select “Promote to Variable” (similar to Houdini)

Variables in Details Window:

Displaying:

This can quickly be done by making the variable public: click the eye icon next to the variable in your blueprint so that it displays an open eye (indicating the variable is now public).

Organizing:

Similar to having headers in Unity to group variables, Unreal does this with Categories. Just select the variable in your blueprint, and the Details window has a section named Category where a dropdown shows all the previously made Categories this variable can be placed in. If you want to make a new category to place the variable into, just type a new name there.

Controlling Variables:

Similar to Unity, public variables within the blueprints can also be given limits and controls for better usability in the Details window. For example, you can add a slider range to a variable which creates a slider in the Details window which can be dragged within the given minimum and maximum values.
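As a rough picture of what a slider range does to a public variable, here is a small plain Python sketch. It models only the dragging behavior described above. The `SliderVariable` class, its field names, and the example values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SliderVariable:
    name: str
    value: float
    slider_min: float
    slider_max: float
    category: str = "Default"  # grouping shown in the Details window

    def set_from_slider(self, requested):
        """Keep the dragged value inside the configured range."""
        self.value = max(self.slider_min, min(self.slider_max, requested))
        return self.value


jump_boost = SliderVariable("JumpBoost", 600.0, 0.0, 2000.0, category="Powerups")
too_high = jump_boost.set_from_slider(2500.0)  # held at the maximum
too_low = jump_boost.set_from_slider(-5.0)     # held at the minimum
in_range = jump_boost.set_from_slider(800.0)   # accepted as-is
```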

Branch Node:

The foundational conditional statement for blueprints: it routes execution to one path if the condition is true and another if it is false.

Shortcut: Hold B + Left-Click (in Event Graph)

OnComponentBeginOverlap and OnComponentEndOverlap nodes:

These are similar to the OnTriggerEnter and OnTriggerExit methods within Unity (the overlap counterparts of OnCollisionEnter and OnCollisionExit). They help determine what actions to take when something enters a collider, and what actions to take when it leaves.

Solely entering a collider is common when collecting objects. Entering and exiting a collider is common when certain player actions can only be performed within a certain proximity to an object (such as being able to open a door): the object needs to receive input from the player while they are close, then stop receiving input once the player gets too far away.
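The door case can be sketched as a small state machine in plain Python. This is only an illustration of the begin/end overlap pattern, not Unreal code. The `Door` class, its method names, and the `"Player"` tag are hypothetical.

```python
class Door:
    def __init__(self):
        self.player_in_range = False
        self.is_open = False

    def on_begin_overlap(self, other):
        if other == "Player":
            self.player_in_range = True   # start listening for input

    def on_end_overlap(self, other):
        if other == "Player":
            self.player_in_range = False  # stop listening for input

    def on_interact(self):
        """Toggle the door, but only while the player is inside the collider."""
        if self.player_in_range:
            self.is_open = not self.is_open
        return self.is_open


door = Door()
far_away = door.on_interact()      # ignored, player is not close yet
door.on_begin_overlap("Player")
up_close = door.on_interact()      # opens the door
door.on_end_overlap("Player")
walked_off = door.on_interact()    # ignored again, door stays open
```

A collectible would only need the begin overlap half of this, since it disappears as soon as the player touches it.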

Sequence Node:

Used to sequentially perform a number of methods in a designated order.

Making Your Own Blueprint; Common Components:

Static Mesh & Collider (of some type)

Creating Events and Functions:

Similar to the events and functions already present in Unreal, you can also create your own custom versions of these. These can then be called from other locations as long as they have a reference to the overall class, similar to calling methods of other classes in Unity or programming.

Fig. 1: Image from my Platform Setup with Sequencer in Tutorial

Summary

I think this was a very solid tutorial overall that made me feel much more confident in working with Unreal, especially when it comes to interacting with the blueprint system. I am starting to be able to draw parallels between Unreal’s blueprints and the code used to perform similar actions in Unity, which is helping me understand how to add functionality to objects in Unreal. One major difference is that Unreal already offers the designer so much immediately upon creating a new project, and it will just take experience to learn what is already there. For example, blueprints like the one for the game mode running in the background do a lot of the work you might write a game manager class or something similar for in a Unity project.

Blueprint nodes are also so numerous that finding what you want as a beginner can be too overwhelming to be practical. Simply having more experience helps with the more commonly used ones, however, like collider overlaps and branches, so I think just a few more tutorials will allow me to start building my own small projects.

via Blogger http://stevelilleyschool.blogspot.com/2021/04/unreal-tutorial-unreal-engine-426.html